Neography & Conlangs:

Constructed Information Exchange


What I want to explore on this page is why someone might want to construct their own language (conlang) and/or make a new (or, I guess you might say, neo) script, and what we can learn from prior work. This includes everything from scripts and languages made for fantasy novels to programming languages, musical notation, and other fields that rely heavily on symbolic input methods. Then, because this site mostly caters to a tech-centric audience, I want to tie it all together by looking at it all through a technology-focused lens.

Some Linguistics Context #

At risk of turning this page into a linguistics lesson, I want to provide just a tiny bit of context and a mental nudge to recall that, yeah, language is incredibly diverse:

Sure, some languages may order things differently than English (“orange [adj.] cat [n.]” or “gato [n.] naranja [adj.]”?) or have grammatical gender, but there is a lot more to consider:

- What sounds are in the language?

- Does the language convey information in pitch or tone? (Like asking a question in English)

- Is this conveyed in the writing system (vietnamesetypography.com)?

- How many words for different colors are there?

- How precisely is time conveyed grammatically?

- Past, Present, Future?

- Far Past, Past, Immediately Past, Present, Immediately following, This day, This week, In this lifetime, Far Future?

- Are there any unfamiliar grammatical cases?

- Word length / Compounding

- Does the language go full German or Icelandic, or are words short and rarely to never compounded?

- Is the language written left to right, top to bottom?

Digital interoperability with natural languages that aren’t Left-to-Right, Top-to-Bottom is spotty. Many programs and websites struggle with support, which is unfortunate.

Obviously, this is glossing over whole subjects and intricacies that linguists have spent lifetimes on and that I am absofuckinglutely (Hey, look at that, an infix) not qualified to talk about. My point is that there’s a lot of ground to be covered and explored for conlangs and neoscripts.

Additionally, I want to stress (pun intended) how important the International Phonetic Alphabet is. For the uninitiated, the IPA is those weird symbols you’ve seen in dictionaries that tell you how to pronounce a word. For example, the word “knife” would be represented with nɑ́jf. This can be useful for everything from differentiating between accents to telling heteronyms apart (for example “tear”, like from your eye, is tɪɚ while a “tear” like in fabric is tɛɚ, at least for most English speakers). Like any skill, it takes practice to be able to read … sounds? words? Mouth positions? … This is hard to talk about … written in the IPA, but it’s absolutely worth learning.

If you want to get into conlanging, neoscripting, linguistics, or even just want to learn a new natural language, understanding the IPA will make your life easier.

The fantastic YouTube channel languagejones has just started a series about how to learn the IPA at the time of writing, and if you’re here I imagine you’d enjoy following along.

The official chart of the IPA from the International Phonetic Association, CC BY-SA 3.0

Exploring Existing work #

I already have a page on this website about toki pona, one constructed language or “conlang” that I particularly enjoy. Being in its community, I’ve been introduced to many interesting ideas in the conlang and the adjacent constructed script (conscript) communities. Constructed scripts are also sometimes called “neoscripts”, I assume to avoid the existing meaning of “conscript”: to enlist in the armed services.

In these communities, I have seen some absolutely unhinged ideas:

Of course, most of what’s on display here isn’t this ridiculous. Some of these neoscripts are for conlangs - fully new languages that need their own writing system as no existing system would even work.

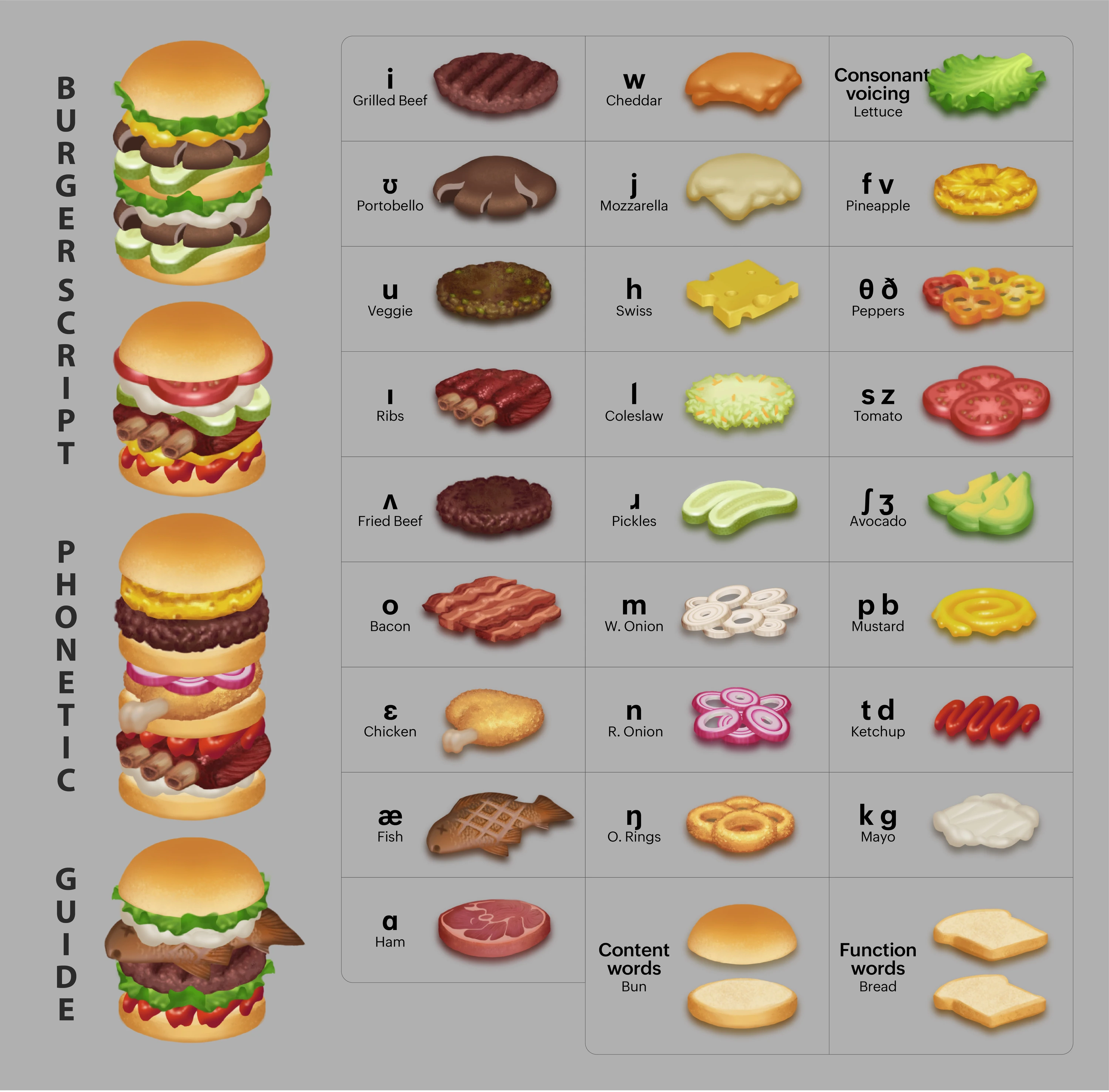

Some only make new symbols for existing alphabets - like BurgerScript.

Some dramatically challenge the idea of what it means to “write” something.

Some are used for building a world and culture in a fictional story: like Elvish in Lord Of The Rings.

Some are an attempt to make a writing system that fits an existing language better than systems used today.

Some are made to allow for sharing or concealing messages in mediums that wouldn’t normally be thought of as places thoughts could be recorded at all.

Some exist for the art of absurdity, with competitions to make the most cursed conlangs and neoscripts imaginable.

Conlangs #

There are a few categories of conlangs:

- Those that try to act as “a global language for all”, formally called “International auxiliary languages” or IALs

- “Engineered languages” try to make a language as good as possible given some restrictions or an intended use case. Arguably, IALs fall into this category.

- “Artistic languages” are those made as art. Sometimes these are self-contained: the art is the language. Often they exist to make a world feel more fantastical, and are used for TV, novels, comics, or other entertainment media, but not always.

You’re probably familiar with more art langs than you realized, some of the more commonly recognized include:

- Quenya (Elvish), Sindarin (Elvish), and Khuzdul (Dwarvish) were made by J. R. R. Tolkien and used in The Lord of the Rings.

- Dothraki and Valyrian by David J. Peterson, made for Game of Thrones, expanded into a full language from the bits in George R. R. Martin’s A Song of Ice and Fire.

- Klingon by Marc Okrand, made for Star Trek

- Naʼvi by Paul Frommer, made for Avatar

Of course, those only account for the very highest tip of the iceberg.

For the purposes of this page, I’ll mostly talk about engineered languages as it’s harder to talk about what makes a given art lang interesting without turning into an art snob.

Let’s look at a few languages quickly, just to see what exists:

- toki pona - The language with only ~130 words. It puts all of its points into minimalism and works much better for real conversations than you’d think.

- Esperanto - The granddaddy of all International auxiliary languages, it now has 60,000 speakers.

- Kēlen - An attempt at a verb-less language. It also has a beautiful ceremonial alphabet that uses knotwork to hold meaning.

- Lojban - An attempt to make a language without syntactic ambiguity - that is, any sentence can only mean one thing. Fixing the Lesbian Vampire Killers problem. Are they?

- Lesbians that kill vampires.

- Killers of lesbian vampires.

- Lesbian vampires that are killers.

Of course, I can’t reasonably explain how each of these languages works or why they’re interesting in only a few sentences, so I encourage you to click on those links!

Moving on, let’s look at some scripts. To get started, I want to explain what kinds of writing systems exist then look at scripts that have particularly interesting applications - that is, scripts which exist because the typical writing systems don’t work well where they’re used.

Types of Writing Systems #

Imagine you’ve sat down to make a writing system for a spoken language, but you know nothing about other writing systems. There are a few approaches you might take. You might assume:

- Each sound should have a symbol (A Segmental system)

- Each Syllable should have a symbol (A Syllabic system)

- Each word should have a symbol (A Logographic system)

You could, of course, mix some of the above - many natural languages do. You also might decide to leave some things out altogether. For example, an abjad is a writing system where the alphabet omits vowels. Ths wrks bttr thn yu mght thnk, and some natural languages do this (Arabic, Hebrew, Syriac).
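To get a feel for how much survives when vowels go unwritten, here is a minimal Python sketch. It only imitates the idea with Latin letters and is not how Arabic, Hebrew, or Syriac actually work; the function name `drop_vowels` and the "keep a word-initial vowel" rule are my own assumptions for illustration.

```python
VOWELS = set("aeiouAEIOU")


def drop_vowels(text: str) -> str:
    """Drop every vowel except one that starts a word (an assumed rule)."""
    shortened = []
    for word in text.split(" "):
        # Keep the first letter so words like "a" or "and" stay recognizable.
        head, tail = word[:1], word[1:]
        shortened.append(head + "".join(ch for ch in tail if ch not in VOWELS))
    return " ".join(shortened)


print(drop_vowels("This works better than you might think"))
# prints: Ths wrks bttr thn y mght thnk
```

Most of the sentence stays readable because English consonant skeletons are fairly distinctive, though short words like "you" suffer.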

If you want more in depth reading, see https://neography.info/writing-systems/.

Why look at both? #

While it’s possible, and quite common, to only make a new writing system (using an existing language) or make only a new language (using an existing writing system) the two are still linked quite deeply. In part this is because if a language has a phonetic inventory (the list of sounds it uses) not seen in a natural language it may be easier to make a new script than not.

Beyond that though, most people with the creativity to make a language - particularly if it’s an artlang - want to have the vibe of the script tailored to reflect the vibe of the language.

(Somewhat) Practical Neoscripts #

There’s one of these you probably already know of, as you’ve probably seen a fair number of 7-segment displays in your life, and you’ve probably seen them used to represent Latin characters:

Now, you could probably debate if this is a new script or just a font, since clearly the intent is to show Latin characters - but because of the limitation being so severe, I think it might count, albeit barely. This is an important distinction though, because we don’t want to consider every different font a whole new writing system!
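To make the "severe limitation" concrete, here is a small Python sketch of a 7-segment encoding. The a-g segment names and the digit patterns follow the common convention; the letter shapes are only one plausible choice (there is no single standard for them), and `SEGMENTS`, `render_char`, and `render` are names made up for this example.

```python
# Segment names: a = top, b = upper right, c = lower right, d = bottom,
# e = lower left, f = upper left, g = middle.
SEGMENTS = {
    "0": "abcdef", "1": "bc", "2": "abdeg", "3": "abcdg", "4": "bcfg",
    "5": "acdfg", "6": "acdefg", "7": "abc", "8": "abcdefg", "9": "abcdfg",
    # Letter shapes below are assumptions; displays differ on these.
    "A": "abcefg", "C": "adef", "E": "adefg", "F": "aefg",
    "H": "bcefg", "L": "def", "P": "abefg", "U": "bcdef",
}


def render_char(ch: str) -> list[str]:
    """Return three rows of ASCII art for one supported character."""
    lit = SEGMENTS[ch.upper()]

    def seg(name: str, glyph: str) -> str:
        return glyph if name in lit else " "

    return [
        " " + seg("a", "_") + " ",
        seg("f", "|") + seg("g", "_") + seg("b", "|"),
        seg("e", "|") + seg("d", "_") + seg("c", "|"),
    ]


def render(text: str) -> str:
    """Render a string side by side, one space between characters."""
    rows = ["", "", ""]
    for ch in text:
        for i, part in enumerate(render_char(ch)):
            rows[i] += part + " "
    return "\n".join(row.rstrip() for row in rows)


print(render("HELP"))
```

With only seven on/off segments there are 128 possible patterns, which is why letters like K, M, or W have no comfortable shape and why this sits somewhere between a font and a script.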

So, more true to the actual spirit, let’s start with HexCasting, a Minecraft mod which requires the user to learn to write spells into a hexagonal grid.

Okay, neat, sure, but maybe it’s a contrived example. It’s solving a problem that could’ve been done by just letting the user type out commands (albeit that would be less fun). So, what about working around a real limitation…

Pixelscript exists for people working on pixel art. At extremely low resolutions, there’s just not room to add a normal signature and have it be legible anyway.


From omniglot.com. Text is “All human beings are born free and equal in dignity and rights. They are endowed with reason and conscience and should act towards one another in a spirit of brotherhood.” which is Article 1 of the Universal Declaration of Human Rights

Of course, there are other cases of fitting in writing when the tools or space for doing so are limited. A good example of this is Nailscript:

Again from omniglot.com, and again this is Article 1 of the Universal Declaration of Human Rights

Its simplicity in construction, compactness, and use of the 3rd dimension as nails lie over one another really pushed my intuition for what a script could be.

When I tried writing my name I did realize you might want to wear ear protection when using this script!

Decisions, Decisions… #

Languages and scripts have to make certain trade-offs. None of these necessarily make any language or script “better” than any other, but if you have a goal in mind, there are areas where designing either to meet those goals may be difficult:

- Flexibility & Precision - Can you say what you actually mean?

- This can be a lack of vocabulary

- Ambiguity - Can what you’ve said/written mean more than one thing?

- This includes everything from the existence of sarcasm to homophones

- Cultural differences - Will someone from a different culture still get the same meaning?

Of course, none of these are strictly bad things.

- Maybe you want a language that prioritizes simplicity, like Toki Pona

- Maybe you want a language that’s full of double entendres for writing poetry or lyrics

- Maybe you want cultural differences to be embedded in a language to make a point about two groups of people in a story.

Flexibility & Precision: Music Notation as a proxy for language design #

Sometimes people will say that “Musical notation is a universal language among musicians”. Given we’re talking about languages, you should be able to see the problem here: We don’t think of <Your Native Language Here> as a perfect-for-all-uses, universal language.

It might be true that most musicians can read musical notation, but that doesn’t mean it’s well suited to representing their art. It’s fine if you want to convey an idea as expressible by the instruments of Western traditions for classical arrangements, but it’s not universal, as I’m sure anyone who plays music with microtonal elements, complex timings, or instruments where the timbre can be adjusted over time can tell you.

Moreover, reading music is a bit difficult to learn. This has led to the “universality” of the notation being a lie, as most any guitarist will instead be familiar with tabs:

    e|---------------------------------------------------------------|
    B|---------------------------------------------------------------|
    G|-----------------------------------2---------------------------|
    D|------------------2------4---5--4-------5---4--------2---------|
    A|---------------------------------------------------------------|
    E|---------------------------------------------------------------|

    e|---------------------------------------------------------------|
    B|----2--3--2--2-0-----------------------------------------------|
    G|--4--------2-------------0--2------0---------------------------|
    D|-------------------0---2------4--2---4--2-4--------------------|
    A|-----------------2--------------------------5---7--------------|
    E|---------------------------------------------------------------|

Part of the theme for the Halo games, ripped from ultimate-guitar.com, which is a dumpster fire of a website which I will not link directly to.

Tabs can have various flavors of markings, including ~ for vibrato, X’s above the lines for palm mutes, / for sliding from one fret to another, etc. They’re a bit crude compared to sheet music and require that your guitar’s tuning match the one listed, but they’re quite easy to read.
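The dependence on tuning is easy to see in code: a tab only stores fret numbers, so the pitch comes from adding each fret to the open string's pitch. Below is a rough Python sketch, assuming standard tuning (E2 A2 D3 G3 B3 E4) and MIDI note numbers; the helper names are hypothetical and it ignores markings like ~ or /.

```python
import re

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

# MIDI numbers of the open strings in standard tuning (an assumption).
# Lowercase "e" is the high string, matching the usual tab convention.
STANDARD_TUNING = {"e": 64, "B": 59, "G": 55, "D": 50, "A": 45, "E": 40}


def midi_to_name(midi: int) -> str:
    """Name a MIDI note, using the convention where 60 is C4."""
    return f"{NOTE_NAMES[midi % 12]}{midi // 12 - 1}"


def tab_line_to_notes(line: str, tuning: dict[str, int] = STANDARD_TUNING) -> list[str]:
    """Turn one tab line like 'A|--5---7--|' into a list of note names."""
    string_name, _, frets = line.partition("|")
    open_string = tuning[string_name.strip()]
    # Each fret raises the open string by one semitone.
    return [midi_to_name(open_string + int(f)) for f in re.findall(r"\d+", frets)]


print(tab_line_to_notes("A|--5---7--|"))  # ['D3', 'E3']

# The same written frets give different notes in a different tuning.
drop_d = {**STANDARD_TUNING, "E": 38}
print(tab_line_to_notes("E|--0--|", drop_d))  # ['D2']
```

Notice what the format throws away: there is no rhythm information at all, only order and rough spacing, which is part of why tabs are easier to read and also cruder than sheet music.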

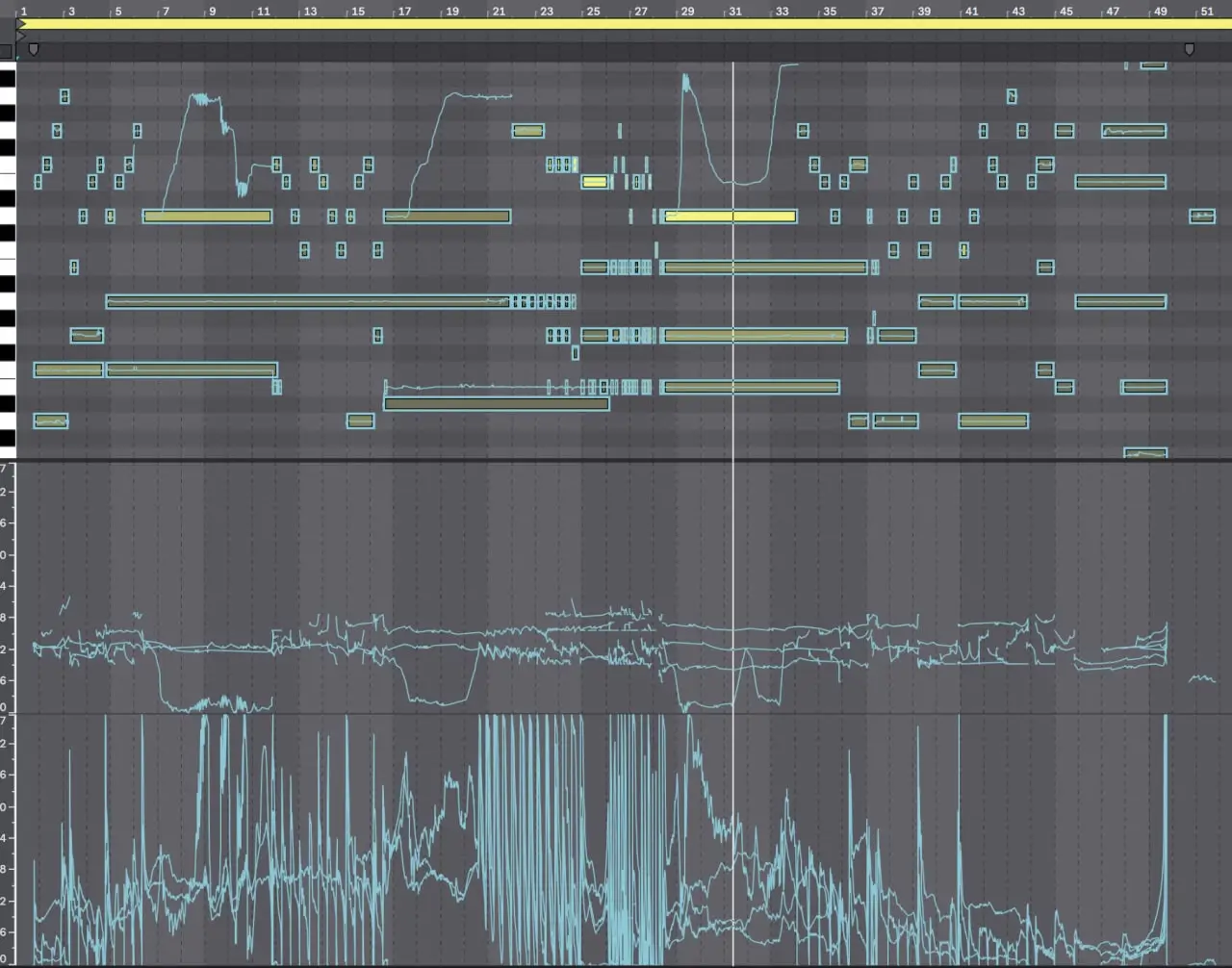

But music today is often made in a piano roll,

and has historically been made in trackers,


In either case, the notes can carry a lot of added information - pitch bend (now sometimes per-note) and multiple data streams to represent the virtual turning of knobs. That interface is a writing system of sorts - you could even argue different input devices and instruments, as long as they’re just giving a computer digital data, are really just different writing utensils.

Of course, each has different tradeoffs: You wouldn’t want to try to read a printed off piano roll or tracker sheet for performance!

Tangent: The Musical Equivalent of Conlangs

Just like conlangs, there have been some efforts to make new music notation systems. Hummingbird and Clairnote both make changes (which may be good or bad, depending on who you ask) to the existing common music notation system, but don’t really attempt to totally reinvent the wheel.

If these are conlangs, they’re not even as different as Iqglic (pronounced the same as “English”) is from English, though.

Dodeka goes a bit further, but mostly feels like an advert for a piano that just flattens the keybed. Regardless, I think there is value in at least trying to make a system easier to read and write even if it doesn’t improve the overall flexibility.

I should also point out there are other musical notation systems from cultures I am less familiar with - just like I’m not experienced in natural languages outside of English. The Musical Notation Wikipedia page shows some of these, but I’m not even sure how to go about researching most of these in depth. This also ties into how the term “music theory” isn’t very good at describing modern music so much as the harmonic style of 18th century European musicians (Adam Neely, YouTube) - If you’ll forgive the tangent going yet another level deeper, the same issues we have with other languages going extinct will inevitably come to these musical notations too.

To the point: It seems like the “shared, universal language” isn’t all that shared or all that universal - let alone flexible enough to convey what we need.

I’m also not going to pretend that I know enough to take my own crack at making something that’s better outside of maybe one niche use case. Tantacrul recently made a video where he very persuasively argues that while what we have has its flaws, it’s currently pretty much the best option there is for anything off a computer for most instruments, but that tabs and the other systems that have evolved certainly have their place too.

A tiny bit more for this music tangent

I’m not sure what a new language for expressing music would look like, nor do I know how software for writing it would maintain a reasonable degree of usability, but I did find some interesting existing tools that give it a good shot:

- Karya “One way to look at it is a 2D language for expressing music along with an editor for that language.”

- Jird “Jird is a little language for writing and hearing music in just intonation. It uses ratios to express frequencies, durations, and volumes.”

Somewhat related, SMuFL is a standard used to display musical notation - it’s basically just a really clever way of (ab)using fonts and I think it’s neat.

There are an absolutely WILD number of crazy musical notation and sequencing systems because the standard musical notation can’t do everything. For the normal roles that sheet music has, yep, pretty great. But just like how we might want the piano roll or tracker to make music (and how that interface changes the end result) we may want other languages because no one language can be infinitely flexible and work for every use case.

The takeaway I want you to get is that, just like with musical notation, a language has to make compromises in which ways it’s flexible vs precise. If you can technically represent everything you may find it hard to say some things in an elegant way, but if there are some things you want to say and there’s no way to convey them at all, that may also be a problem.

Ambiguity: Emoji + Dialects #

Okay, but, what about Emoji? 🤔

Well, yeah, they’re a writing system. They may not be incredibly precise if the exact symbol you need doesn’t exist, but they can be rather information dense.

👔💼🔜🏠👉👌 👨❤️💋👨 🍑🍆❓

Is a pretty clear message.

Clearly talking in only emoji wouldn’t really be ideal, but it’s still wrong to discount it outright as a conlang/writing system (or would it be a natlang?)

But it does have a massive problem: it doesn’t display consistently on different platforms. It boggles my mind that it’s a crapshoot whether 🏳️‍⚧️ shows up as a trans flag or as a white flag followed by a trans symbol (🏳️⚧️). Pride or surrender? Similarly, the Windows operating system is cool with gay pirates, 🏳️‍🌈🏴‍☠️, but no country flags will render correctly (though it is possible your browser will compensate, here’s a US flag: 🇺🇸). More complicated yet, will the Taiwan flag (🇹🇼) render if you’re in China? Doubtful.

This means the medium itself isn’t reliable AND everything that’s said has to go through a sort of extra look-up in the receiving party’s brain to try to parse the intended meaning of the message.
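The inconsistent rendering makes more sense once you see that many emoji are not single characters but sequences that a font may or may not know how to merge. A short Python sketch using only the standard library; the constant and function names are my own, and the code points are written as escapes so the example does not depend on your editor preserving invisible characters:

```python
import unicodedata

ZWJ = "\u200d"   # ZERO WIDTH JOINER: asks the renderer to merge neighbors
VS16 = "\ufe0f"  # VARIATION SELECTOR-16: asks for emoji-style presentation

WHITE_FLAG = "\U0001F3F3"
TRANS_SYMBOL = "\u26a7"

# The trans flag is a white flag joined to the transgender symbol.
TRANS_FLAG = WHITE_FLAG + VS16 + ZWJ + TRANS_SYMBOL + VS16


def describe(text: str) -> list[str]:
    """List each code point in a string with its Unicode name."""
    return [f"U+{ord(ch):04X} {unicodedata.name(ch, '<unnamed>')}" for ch in text]


def country_flag(code: str) -> str:
    """Build a flag from a two-letter country code via regional indicators."""
    return "".join(chr(0x1F1E6 + ord(c) - ord("A")) for c in code.upper())


for line in describe(TRANS_FLAG):
    print(line)

print(country_flag("US"))
```

If a platform lacks a glyph for the joined sequence, it falls back to drawing the pieces one after another, and a platform without flag glyphs shows the two regional indicator letters instead of a flag.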

We can more-or-less equate this with dialects or jargon. If you read the word “Biscuit” you may be thinking of a cookie or a scone depending on which side of the Atlantic you’re on.

Cultural Differences: Tone markers & txting styl #

If I’m in the car with someone and they ask me to send a text for them, I get uncomfortable because I don’t know how they text.

Think about texting someone young that you’ve texted every day for years. They probably don’t normally put full stops/periods at the end of sentences, so when they text

Hey. We need to talk.

That’s a different text from

Hey we NEED to talk

Which is different from

hey we need 2 talk…

I think most people would find any of the above alarming, but 1. probably has you going “Ah shit.” while 2. has you frantically trying to figure out why and hoping that it’s not something you did, while 3. might have you concerned about their happiness. Tom Scott has a good video on this.

Some languages do have honorifics and formality - such as tú vs usted in Spanish or how Korean can even change verb conjugation.

I really hate languages that do this in this particular way. The common rule of this traditionalist respect-your-elders attitude directly conflicts with my strongly held belief that people earn respect independently of position of power or age. Hell, I’m likely to respect someone in a position of power less than one without it unless they’ve proven to me that they’ve earned that position rightfully.

A conlang, though, could do something new and instead encode something like the speaker’s view of the listener into the verb. For example, there could be a new punctuation mark. Let’s make these up real quick as a very low effort bodge onto English:

- 𑙧 → The speaker is experiencing a high energy negative emotion in relation to the primary action

- ⁝ → The speaker is experiencing a low energy negative emotion in relation to the primary action

- ᥄ → The speaker is experiencing a low energy positive emotion in relation to the primary action

- ! → The speaker is experiencing a high energy positive emotion in relation to the primary action

Now, let’s make some new sentences:

- I woke up late this morning𑙧

- The speaker is grumpy, presumably having missed something by over sleeping

- I woke up late this morning⁝

- The speaker is bummed to have lost part of the day

- I woke up late this morning᥄

- The speaker is thankful to have slept in

- I woke up late this morning!

- The speaker has more energy than usual and some extra “bring it on” attitude for the day.
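Written out as data, the four made-up marks are just a two-by-two grid of energy and valence. A tiny Python sketch of that idea, where `TONE_MARKS` and `read_tone` are hypothetical names for this example only:

```python
# (energy, valence) for each sentence-final mark proposed above.
TONE_MARKS = {
    "𑙧": ("high", "negative"),
    "⁝": ("low", "negative"),
    "᥄": ("low", "positive"),
    "!": ("high", "positive"),
}


def read_tone(sentence: str):
    """Return (energy, valence) for the final mark, or None if unmarked."""
    mark = sentence.strip()[-1:]
    return TONE_MARKS.get(mark)


print(read_tone("I woke up late this morning!"))  # ('high', 'positive')
print(read_tone("I woke up late this morning."))  # None
```

Two binary axes give four marks; adding a third axis for who the emotion is aimed at would already push this to eight or twelve.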

Could there be ambiguity in these? Of course. It’s also likely people would either be lazy and not use them (defaulting to just a period) or would use other “hacks” like we already do to imply sarcasm or tone, ranging from the less-than-ideal tone markers (Which work absolutely perfectly /s) to just quoting out a word like in “You sure do have ‘interesting’ taste in romantic partners”. These also don’t cover every option or even fully fix the problem in the “Need to talk” example - to do that we’d probably need to double or triple the number of punctuation options to indicate if it’s directed at the listener, a 3rd party, or strictly internal - at which point it probably makes more sense to make verb conjugations instead of attaching it to the sentence as a whole…

… have I mentioned that language is complicated?

Cultural Differences Bonus Round: Gender #

He/Him She/Her Xe/Xem/Xyr Ze/Zir Fae/Faer They/Their …

Different people have a different level of acceptance of different pronouns… because something-something culture war? Regardless, conlangs may provide different pronouns, often either adding one for non-binary individuals or just using a unified, un-gendered pronoun.

If this sounds difficult, just remember, the correct way to refer to someone in any language is whatever they want you to use, just like in English!

If you want a deep dive into pronouns, I recommend this video from Language Jones (YouTube).

If you’re interested in some of the data relating to how these pronouns are used check out https://www.gendercensus.com/results/2022-worldwide/

Why Someone Would Make Their Own Language (or script) #

Before we get into any of the concrete reasons, it’s important to acknowledge someone may make a conlang or neoscript for no other reason than the joy of the act of creating, as a joke, or just as a personal exercise - all of these reasons are still 100% valid. Still, most people will have an end goal in mind or a challenge in the design. Let’s look at some motivations.

Hiding Messages #

Hiding messages is an art form in itself, and can have a myriad of different requirements:

- Maybe you want any onlooker in the know to be able to decode the message

- Maybe you want it to be difficult to see there’s a message at all

- Maybe you want it to be obvious there’s a message and make it difficult to decode

This does start to go into a conversation about steganography (Wikipedia).

Steganography is the practice of representing information within another message or physical object, in such a manner that the presence of the information is not evident to human inspection. In computing/electronic contexts, a computer file, message, image, or video is concealed within another file, message, image, or video.

To avoid losing the topic of this page though, there are a few scripts which fill this function. Babuk, for example, can be used to hide messages in a game of Go.

Arguably Morse code could be lumped into the “for hiding messages” category too, but it’s often pretty obvious.

Better Serving Disability #

Vision: Braille & Screen readers #

Screen readers #

I don’t have much to say about screen readers as I have little experience with them; however, it’s notable that text-to-speech engines are rapidly advancing and this may make them better at handling uncommon inputs and conveying emotion or stress, especially when content authors properly mark up their content to support it. The AI boom may have its downsides, but I’m all for better assistive tech!

Braille #

It’s not reasonable to expect that people with vision disabilities always have their ears occupied by whatever they’re reading or be listening out loud and potentially disturbing others. Also, it’s not exactly practical to have every sign include an embedded speaker repeating the same word forever. So, braille exists.

Braille is mostly just a font, but there are some special rules, mostly for contractions to make common words shorter.

a-z0-9: ⠁ ⠃ ⠉ ⠙ ⠑ ⠋ ⠛ ⠓ ⠊ ⠚ ⠅ ⠇ ⠍ ⠝ ⠕ ⠏ ⠟ ⠗ ⠎ ⠞ ⠥ ⠧ ⠺ ⠭ ⠽ ⠵ ⠚ ⠁ ⠃ ⠉ ⠙ ⠑ ⠋ ⠛ ⠓ ⠊
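The "mostly just a font" part can be sketched directly, because Unicode lays out its Braille Patterns block so that each dot is one bit. The Python below covers only uncontracted letters and digits (none of the contraction rules mentioned above), and names like `dots_to_char` and `encode` are invented for this example.

```python
BRAILLE_BASE = 0x2800  # start of the Unicode "Braille Patterns" block


def dots_to_char(dots: tuple[int, ...]) -> str:
    """Dot n (1-8) sets bit n-1 of the offset into the block."""
    return chr(BRAILLE_BASE + sum(1 << (d - 1) for d in dots))


# Dot patterns for a-j; the rest of the alphabet is derived from these.
A_TO_J = {
    "a": (1,), "b": (1, 2), "c": (1, 4), "d": (1, 4, 5), "e": (1, 5),
    "f": (1, 2, 4), "g": (1, 2, 4, 5), "h": (1, 2, 5), "i": (2, 4), "j": (2, 4, 5),
}

LETTERS = {}
for i, (letter, dots) in enumerate(A_TO_J.items()):
    LETTERS[letter] = dots_to_char(dots)
    LETTERS["klmnopqrst"[i]] = dots_to_char(dots + (3,))    # k-t add dot 3
    if i < 5:
        LETTERS["uvxyz"[i]] = dots_to_char(dots + (3, 6))   # u,v,x,y,z add dots 3 and 6
LETTERS["w"] = dots_to_char((2, 4, 5, 6))                    # w breaks the pattern

NUMBER_SIGN = dots_to_char((3, 4, 5, 6))  # digits reuse a-j after this sign


def encode(text: str) -> str:
    """Letter-for-letter encoding; unknown characters pass through unchanged."""
    out, in_number = [], False
    for ch in text.lower():
        if ch in "0123456789":
            if not in_number:
                out.append(NUMBER_SIGN)
                in_number = True
            out.append(LETTERS["jabcdefghi"[int(ch)]])
        else:
            in_number = False
            out.append(LETTERS.get(ch, ch))
    return "".join(out)


print(encode("toki pona 10"))
```

This is also why the digits in the line above look like repeats of a-j: they are the same cells, and only the preceding number sign tells the reader to interpret them as numbers.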

Being that I’m not vision impaired and that I can’t read braille, it’s hard for me to know what is good or bad about it, but, I do know there’s a real problem with the cost of braille displays. Even the least expensive option I could find was ~$700, but that one only displays 20 characters at a time. I also don’t know if there are programs to make these devices more affordable. If you know more, please reach out to me! I’d love to hear about your experience.

I had the probably-too-obvious question of why not just use a single character “display” that changes under the finger - or even better - let it encode to each finger, resting on the keyboard, like “bumping” the key in the home row? From what I gather, it’s a bit too hard to feel it when it changes dynamically compared to being swiped across the finger? I did find someone else had the same idea back in 2007, but it doesn’t look like it went anywhere.

While I don’t think it’s overly practical, I do like the concept behind this typeface which can be read as braille or Latin characters.

Hearing Impaired: Sign Languages #

There is a common misconception that sign languages are somehow dependent on spoken languages: that they are spoken language expressed in signs, or that they were invented by hearing people. […] Instead, sign languages, like all natural languages, are developed by the people who use them, in this case, deaf people, who may have little or no knowledge of any spoken language.

As a sign language develops, it sometimes borrows elements from spoken languages, just as all languages borrow from other languages that they are in contact with. […]

On the whole, though, sign languages are independent of spoken languages and follow their own paths of development. For example, British Sign Language (BSL) and American Sign Language (ASL) are quite different and mutually unintelligible, even though the hearing people of the United Kingdom and the United States share the same spoken language. The grammars of sign languages do not usually resemble those of spoken languages used in the same geographical area; in fact, in terms of syntax, ASL shares more with spoken Japanese than it does with English.

Of course, there are constructed sign languages where the tie to another language is stronger. The example I’m most familiar with, and have seen people conversing in, is luka pona by jan Olipija, which is derived from the previously mentioned toki pona.

And, just like with non-sign based conlangs, there are attempts to make an International Sign Language, which look about as successful. International Sign isn’t totally a conlang though, as it’s more of a Pidgin.

- oh, right, Pidgin:

Fundamentally, a pidgin is a simplified means of linguistic communication, as it is constructed impromptu, or by convention, between individuals or groups of people. A pidgin is not the native language of any speech community, but is instead learned as a second language.

Bridging the worlds of conlangs and sign languages a bit further, the most fully developed equivalent to the IPA for sign languages - the Sign Language International Phonetic Alphabet, or SLIPA - was developed by David Peterson, the same David Peterson that made Dothraki and Valyrian.

Same language, new system #

Intriguingly, some neoscripts use an existing language but, instead of just mapping the letters to new symbols, change the way we think about a ‘character’ altogether. Often this is to make the spelling and sounds more consistent with each other.

Example text in Fontok. Again, this image is from omniglot and is Article 1 of the Universal Declaration of Human Rights

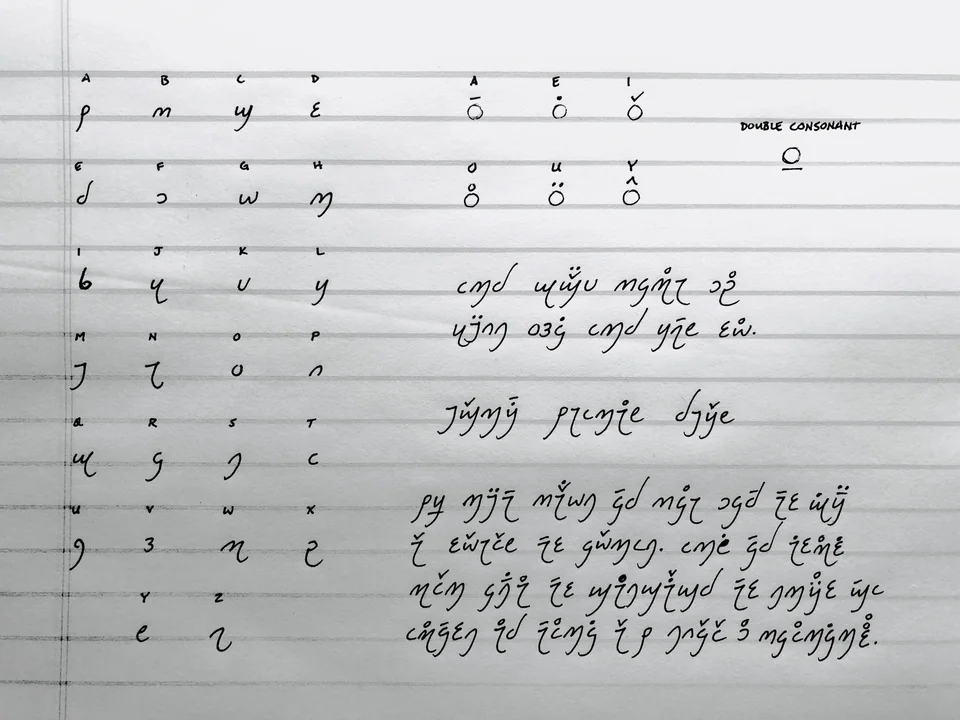

Of these, I particularly like fontok which adds a significant number of characters as each sound (not character) in English is given its own symbol. Of course it doesn’t hurt that text written in fontok is breathtakingly beautiful. Similarly, I’m a big fan of this unnamed script by u/mastefka.

Of course, if you go looking you’ll find many others. These vary in their intent. Some writing systems aim to be international, mostly by giving a symbol to every letter in the IPA. Clearly, this comes at the cost of adjustments that best suit any given language.

World Building & Art #

I suspect that almost everyone who stumbles into the world of conlangs and neoscripts is first introduced to their existence through works of fiction where a constructed language plays a big part - be it one of J. R. R. Tolkien’s from the Lord of the Rings trilogy and related works, such as Quenya (which is written in Tengwar),

This font is Fairfax HD by KreativeKorp, which is licensed under the Open Font License. It is used for all of the conlang displays on this page which are not static images. Some of the examples on this page are also copied directly from that page.

or Orwell’s Newspeak.

In The Art of Language Invention, David J. Peterson - the guy who made Dothraki for A Song of Ice and Fire / Game of Thrones - discusses how Dothraki wasn’t just a jumble of foreign-looking language ideas but was informed by the culture of its fictional speakers and built up around their ideas and values. This same attitude applies to the script, which is on omniglot as well. This is pretty common among nearly all languages made for world building: like natural languages, they’re influenced by the culture of the people they’re invented to be used by.

I find it interesting that this can be true for both scripts and languages.

Languages can evoke cultural context in many ways, but some of the easier to consider points include:

- How are different classes of people portrayed?

- Are any/all genders and species treated equally in language?

- Are classes of people (slaves? kings?) given less expression (ex: no gender for slaves)?

- Are there words for specific good or bad things that don’t exist in natural languages because they’re not very common?

- For example, in a fantasy novel with magic, maybe people feel a different kind of exhaustion when they’ve used a lot of magic. This may use a new word rather than “tired” that more directly states the cause and side effects.

- Has the language adapted around some large event as a time base?

- For example, an extra tense could be added that’s specifically for before, after, or during some major war.

Scripts can be reflective of the culture’s priorities. Do they value something easy to write due to a lack of easy tool use? Scar, for example, looks to be a good option for creatures lacking the dexterity afforded by fingers. Does the language need to be used by both machines and biological life? 12480 might work for that.

Or, a script can intentionally mislead for artistic reasons:

Chiri was invented by Jay Tamar for the webcomic “Passing Human”. […] The language juxtaposes a flowing script with a harsh sound to represent the beautiful surface but deadly interior of the Chiri society.

- Chiri on Omniglot.com

Of course, even for humans just having a new script and language alone can go a long way.

Interestingly, even the discussion of language itself can be moving and artistic, as anybody who has seen Arrival (2016) can attest to,

Arrival Spoiler: The movie revolves around humans trying to communicate with aliens that can only talk by making these floating ink rings.

More fundamentally though, a script with meaning only to the author can still be beautiful. The calligraphy and attention to detail can still be inspiring even if the viewer doesn’t know the full meaning. Hell, sometimes not knowing the meaning can be a statement in itself - making a script that looks like an existing language for commentary on a culture isn’t unheard of. Some artistic pieces will use a new writing system just because it’s beautiful, and that’s enough.

Conlangs aren’t restricted to use in the pages of stories or on the screen either. If you look for it there is an astonishing amount of incredibly well produced music made in many different conlangs.

My favorite conlanging musician is jan Usawi. They release some absolute bops that are sung in toki pona:

On top of this, some tools which effectively have their own language for interacting with them can inspire new art because of the limitations or paths of least resistance of the language.

I think it would be a disservice to my readers not to mention just how unhinged some artlangs can get. Some of the most interesting I’ve seen are:

- Mpiua Tiostouea: “The language of time travelers”

- tiduna,xal: A language with only adjectives where speakers require a potentially infinite amount of mouths.

Both of which I’ve embedded the video showcases for here. Additionally, I thought these were quite neat:

- G̊riǵe T̊s’́ê’êt̊i: A language that can be spoken without a voice box

- θͬæͬɐ̃ͬχ̓ɤ̞̃ɐ̃ͬlͬ: 🥐🥖

What We Can Learn #

Domain Specific ~Languages~ #

In programming, there’s this idea of domain specific languages. These are programming languages that don’t really do everything - instead they do one thing really well.

Why are there different programming languages anyway?

I realize that not everyone reading this may know a programming language, so you may be a bit confused as to what makes a language “domain specific” or even what it would mean for a programming language to not be domain specific.

For general-purpose programming languages - that is, languages which are meant to be usable for solving most any problem - there’s a whole host of reasons a programmer may pick one over the other, but more often than not the reason that dominates is where the code will be running.

For example, if you want to write code that runs on the web, you’re more-or-less stuck using JavaScript because it’s the only language that can modify elements on the page. If you’re writing code to run on a small microprocessor like would be in a smart-lightbulb or in your mouse or keyboard, that will probably be written in C because it’s likely the only one that has official support for generating instructions the processor understands.

Assuming you still have more than one option after deciding where the code will run, you might be limited by other restrictions such as:

- How fast does it need to run?

- For example, you may have to use a harder-to-write but faster-when-running language like C, C++, Rust, or Go because you need to crunch a lot of data.

- Do you need to use code written by others?

- For example, AI stuff is mostly stuck in Python because other AI stuff is in Python

All of this is to say there are different languages because there are trade-offs to be made. Some prioritize speed of execution, some prioritize being easy to write and read, some prioritize compatibility with many systems.

What makes domain specific languages different is they’re focused not on where they run or how fast they run (although they’re usually quite fast) but instead on what data they process. There are DSLs for checking if text matches a given pattern, drawing diagrams, describing how hardware logic should be wired up, or - as mentioned above - describing a musical composition so that it can be rendered to sheet music.

Typically - but not always - DSLs are embedded into a general purpose programming language to do the heavy-lifting where doing it with the more general purpose language would be much more work.

The most famous two are regex (Wikipedia) for processing text and SQL (Wikipedia) for working with databases. I think that idea can apply in other niches too. If you think about speech vs writing vs signing vs braille and how each can have multiple co-existing (or competing) standards / systems, it’s really not a stretch of the imagination.
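To make the "embedded DSL" idea a bit more concrete, here is a minimal sketch of both regex and SQL sprinkled into Python, using only the standard library. The sample sentence, the `conlangs` table, and its columns are made up for illustration.

```python
import re
import sqlite3

# Regex DSL: the pattern string is a tiny language of its own, embedded in Python.
date_pattern = re.compile(r"(\d{4})-(\d{2})-(\d{2})")
match = date_pattern.search("Released on 2023-08-14, revised later.")  # made-up sentence
year, month, day = match.groups()

# SQL DSL: the query string describes *what* data we want, not how to fetch it.
conn = sqlite3.connect(":memory:")  # throwaway in-memory database
conn.execute("CREATE TABLE conlangs (name TEXT, creator TEXT)")  # hypothetical table
conn.executemany(
    "INSERT INTO conlangs VALUES (?, ?)",
    [("Dothraki", "David J. Peterson"), ("toki pona", "Sonja Lang")],
)
rows = conn.execute(
    "SELECT name FROM conlangs WHERE creator LIKE 'David%' ORDER BY name"
).fetchall()

print(year, month, day)
print(rows)
```

In both cases the host language handles the plumbing, while the short embedded string carries the part that would be tedious to spell out by hand.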

My friend jan Usawi recently made a fantastic language called

Rhapsodaic

which is detailed on this website, but as a TL;DR: Rhapsodaic, as a core design philosophy, values preserving emotional meaning over all else… and I mean all. It means the actual meaning might be ambiguous, but you’ll definitely know how the speaker is feeling, and that feeling can be expressed more accurately than in most any other language. It’s also very pretty as a script.

Where I see it as particularly interesting is using it alongside a native language to either add context or clarity to something that’s already written or have it be intermixed, mid sentence, with the native language as necessary - similar to how Regex and SQL are used in programming, sprinkled into other code written in a more capable but less purpose-specific language.

Frankly, I find it remarkable how well this analogy works out. Both programming DSLs and some engineered conlangs (such as Rhapsodaic) share the common attributes of being purpose-driven, having a specific grammar and syntax, and - provided enough experience with them - being more concise for their application than the more general purpose alternatives.

This really got me thinking about what else we could do to make specific languages for talking or writing about different subjects. Arguably this is already common as systems for expressing trade knowledge are common,

- Musician → musical notation

- Electrical engineer → circuit schematics

- Chemical engineer/Chemist → chemical drawings

But if we think about each of those, there are tools for working with them to make it easier than writing/drawing them by hand,

- Musicians can use digital audio workstations or musical composition software which allows them to playback the music as they alter the composition.

- Electrical engineers will use schematic entry software and simulation software such as circuitjs.

- Chemists have various tools for entry, like chemdraw.

Of course, there are many tools out there and I don’t think it’s unreasonable to consider each a different dialect, some more closely related than others.

Bringing this back around, what if we made software packages for language entry? Something that does more than just grammar and spell check but rather helps us consider how to think about complex ideas. Definitely not like Grammarly, sorta like ChatGPT, but really I’m thinking about more tools like prosodic (GitHub):

    ################################################
    ## welcome to prosodic! v1.5 ##
    ################################################

    >> [0.0s] prosodic:en$ When I was a young boy
    000001  when   P:'wɛn  S:P  W:H
    000002  i      P:'aɪ   S:P  W:L
    000003  was    P:wɑz   S:U  W:L
    000004  a      P:eɪ    S:U  W:L
    000005  young  P:'jʌŋ  S:P  W:H
    000006  boy    P:'bɔɪ  S:P  W:L

    >> [7.43s] prosodic:en$ /parse
    text                    parse                    meter
    When I was a young boy  when|I|was.a|YOUNG|boy   wswwsw

Or Catala, which is more-or-less used the other way around: to translate legal documents into an intermediate, semi-human-readable format for verifying the ideals behind the law, which can then spit out code in typical programming languages for working with data as outlined by the laws. The obvious use case is taxation.

I think there’s still a lot of room for advancement here. For example, imagine if we could have a sort of “craft an emotion” dialogue that can help people write Rhapsodaic?

Math #

Knowing the audience for my website, you were probably mentally screaming above that I didn’t mention the most obvious domain with its own language: Math.

Personally, I think the definition of what should be considered language should be pretty broad. It clearly includes this text itself and most of the writing systems above. It obviously includes speech, braille, sign language, and I think most would agree that both math and music constitute “language”.

Now, this isn’t to say there aren’t some definitional lines. I don’t think I’d consider a video a language - a medium on which multiple different languages can be expressed, sure - but not a language itself. The line can get blurry though, especially as you dive into data visualization and interactive content.

Regardless, I think it’s worth considering how interesting math is as a language. I could tell you about how it’s a “universal language”, but is that even true? Probably more so than for music, but not entirely: some languages alive today do use base 20 to varying degrees - French only kind of does, while Dzongkha, for example, is true base 20 - but if we ignore that, different symbols for the same things, and any system that’s just modern math but without ⠀⠀⠀⠀ then, yeah, universal I think is fair. When the Cosmic Calls were made in ‘99 and ‘03, we made some new symbols for displaying mathematics, but sent a message that we thought could be reconstructed to unambiguously show that intelligent life had sent the messages. I highly recommend reading about it (PDF) .

What makes this interesting is the scope of what mathematical notation can convey in ways that most natural languages really struggle to do cleanly. For anything even remotely complicated, it is too difficult for most mere mortals to process math without writing it down anyway. For example, if I say,

“One method for computing pi is to take the square root of twelve times the infinite sum, from zero to infinity, of the fraction whose numerator is the quantity negative one third raised to the index of the sum and whose denominator is the doubled index of the sum plus one.”

I would think most people would have to write it down to have the slightest idea of what I just said, where if you’re already used to reading mathematical notation,

\(\pi ={\sqrt {12}}\sum _{k=0}^{\infty }{\frac {(-{\frac {1}{3}})^{k}}{2k+1}}\)

is comparatively simple. (\(\LaTeX\) was used to format this math; it's pretty much the language for typesetting math digitally.)
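For the programmers in the audience, the same sentence can be written in yet another notation. This is only a sketch: `pi_from_series` and the choice of 30 terms are my own, and any reasonably large number of terms would do since each term is roughly a third the size of the previous one.

```python
import math


def pi_from_series(terms: int) -> float:
    """Approximate pi as sqrt(12) * sum over k < terms of (-1/3)^k / (2k + 1)."""
    total = 0.0
    for k in range(terms):
        total += (-1 / 3) ** k / (2 * k + 1)
    return math.sqrt(12) * total


estimate = pi_from_series(30)
print(estimate)
print(abs(estimate - math.pi))  # how far off the truncated sum is
```

Three notations (English, math, Python) for one idea, each easier or harder to read depending on what you already know.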

Going a bit meta, the math above is rendered with \(\LaTeX\) . This means we have a pseudo-programming language, so depending on how you look at it this is a language used to write a different language. I don’t think that disqualifies either as languages (or at least writing systems). After all, if you have Google Translate translate something from English to Spanish (and it works), it’s not as if we think of the result as just “modified English” - it’s Spanish.

Yet, there is still some fun to be had in this line of thought. If I type out

“Hello World”

That string of text is transferred across the great series of tubes in a binary encoding. Does that make binary - or any base for that matter - its own language? What if we have some agreed upon meaning for the binary, like we do for Unicode for text? Is it a writing system, a language in itself, or neither?
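To see what that looks like in practice, here is a small sketch that turns the text into bits and back. It assumes UTF-8 as the agreed-upon convention; other encodings would give other bit patterns for the same text.

```python
text = "Hello World"

# Encode the text to bytes using UTF-8, one agreed-upon mapping from characters to numbers.
encoded = text.encode("utf-8")

# Show each byte as eight binary digits.
bits = " ".join(f"{byte:08b}" for byte in encoded)
print(bits)

# Anyone who shares the same convention can reverse the process.
decoded = bytes(int(chunk, 2) for chunk in bits.split()).decode("utf-8")
print(decoded)
```

The bits mean nothing on their own. It's the shared convention that makes them readable, which is at least language-adjacent.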

Does showing an audio spectrogram qualify as a writing system? I think it can. Someone trained to do so could absolutely look at a waveform or spectrogram of a recording to understand what was being said - this idea has been taken further, see Elektrum (omniglot.com) - but, say you literally show the raw spectrum, should that count as a script if others were trained to write it by hand? Is having others be able to write it by hand necessary for that definition?

This quickly devolves into interpreting encodings as their own “language” which, like, I guessssss could have an argument made for, but it’s a stretch. Still, I think there’s value in the metaphor, and especially for the technically inclined, thinking about encodings and APIs as forms of communication and taking inspiration from the conlang and conscript/neoscript community can only stand to bring about clever, innovative ideas in storage, compression, representation, and elegance. I think that’s an idea worth being open to, even if only in some double-think way where you don’t actually believe it.

How does this tie in to the current Large Language Model boom? #

I don’t know if tools like ChatGPT will be something we all use every day. I also don’t know if I like it. I have a big ol’ blog post about it, Does It Still Count As A Tool? , of which the TL;DR is that I like computers to be deterministic and to feel in control, but using natural language makes me feel like I’m losing some of that, but I also appreciate its utility.

I do wonder if there’s room (or necessity) for changes to languages and scripts to fill in some gaps here? Maybe we could use new language ideas to get more utility out of the models.

One idea that comes to mind for me is putting tone indication or other elements into the UI, that way the model can convey at least some of the emotional and facial cues that are so important to face-to-face communication.

AI systems could incorporate symbols or icons to indicate the tone of their responses. For example, a thumbs-up symbol could represent a positive or affirmative tone, while a warning sign could indicate caution or a serious tone. These visual cues help users quickly grasp the intended tone of the AI’s message, even without relying on punctuation or writing style variations.

I think applying this the other way could be interesting too. What if we had bots to tell us what the tone of a received message probably is? As an accessibility tool that could be useful. Going a little into black-mirror territory, this could even be used to intentionally filter things. If the text to be shown is interpreted as being overly persuasive and so likely to be advertising, it could be rendered in a different font so the user can be trained to know to take it with a grain (or heaping pile of) salt. This could get pretty nuts.

It’s also worth considering how conlangs can be used to abuse large language models. For example, my friend Cadey Ratio went to DefCon31 and figured out xe could get the model to repeat misinformation by talking to it in toki pona or Esperanto.

There could be some neat ideas that spring from using LLMs for creating new conlangs or understanding nearly-dead nat-langs. One idea that I really like is having an LLM make a reasonable language that no human knows but which could be analyzed from context to try to learn on the fly. This could be useful for a video game, for example, so that it’s a different language every play through. I think this could be a great way to make roguelikes more fun.

For those making languages themselves, the same applies: LLMs stand to be a great resource for generating vocab in bulk or coming up with ideas. Using them to replace the human for complex, natural-ish languages will almost certainly be a flop, but it could still be interesting!

While I don’t want this to devolve into a “why LLMs are cool/not-cool” piece, I think it’s notable that they are allowing people to do things they may otherwise lack the skill to do or do quickly - like what the BlenderGPT or Spellburst can do. While these are interesting, I think there’s a lot more to come, if people can open up to using them for more than a chat bot, search, summarization, and essay writing utility. That is, if people can come up with more actually new ideas for how to use them, and I think exploring using conlangs and neoscripts with LLMs might at least be a baby-step in that direction.

Finally, ignoring the conlang-centric-ness of this page for a moment, Maggie Appleton’s LM Sketchbook concepts are the first things I’ve seen where an LLM could really actually make even non-fiction writing significantly better and really acts like a tool instead of trying to replace the author outright.

Completely new forms of expression #

Language and the symbols we use to communicate influence the way we think and how we approach problems. If we think of instruments as a writing utensil of sorts, the difference between a guitar and a piano is more than just their sound; it’s how we interact with them, which makes some expressions easier and others harder.

I’ve already mentioned Rhapsodaic allowing for much more emotional expression than existing languages (albeit at the cost of other functions) but I also love how the musical sequencing programming language ORCΛ pushes boundaries of creative expression in music. I’d love to see more tools like this applied to other interests and artistic endeavors.

More powerful tools #

If I can get you to buy the idea that programming languages are just domain specific languages in the context of broader human language, that would mean that we can consider unconventional not-what-you-normally-think-of-when-you-think-about-programming-languages-languages as languages too, at least if you squint a little.

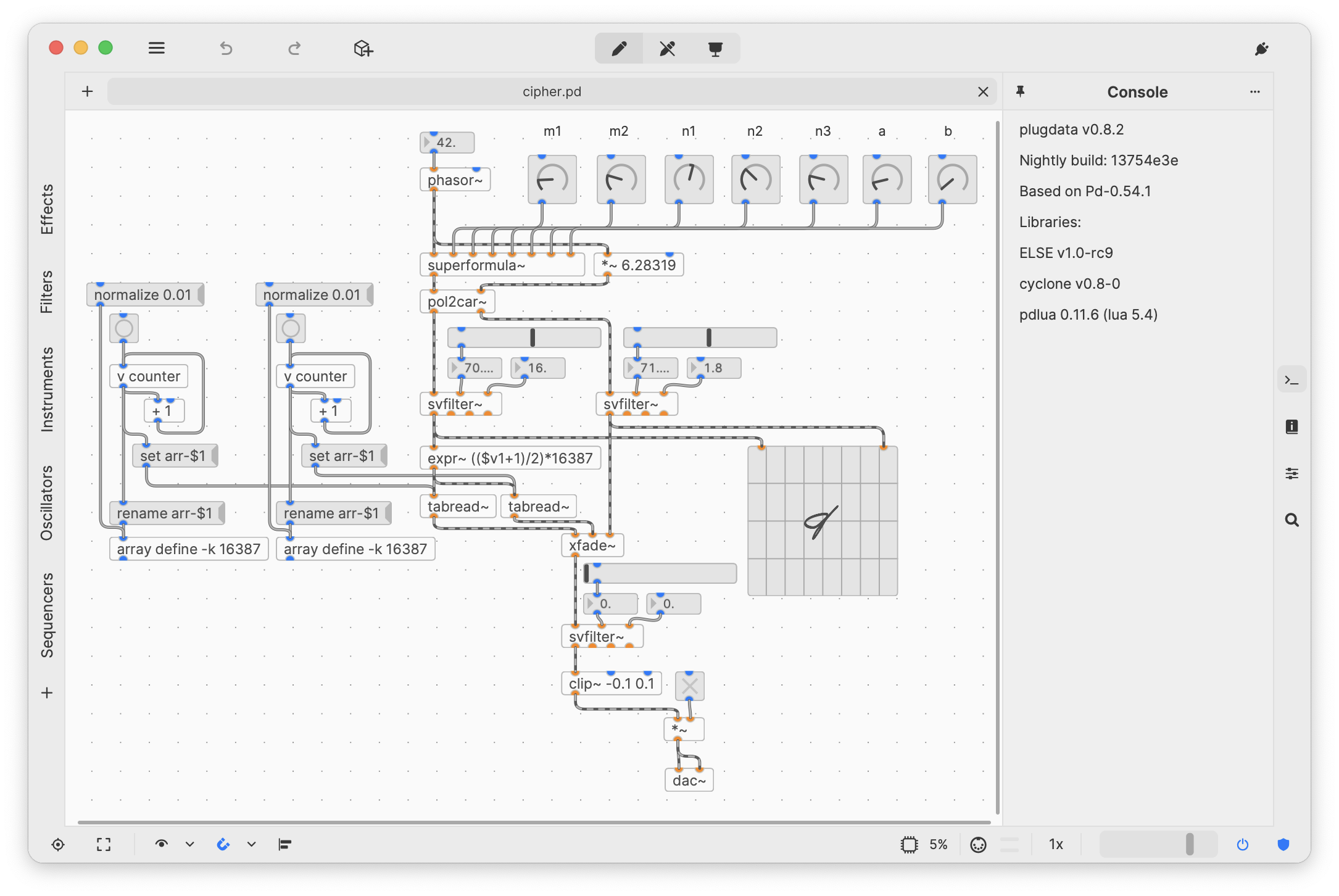

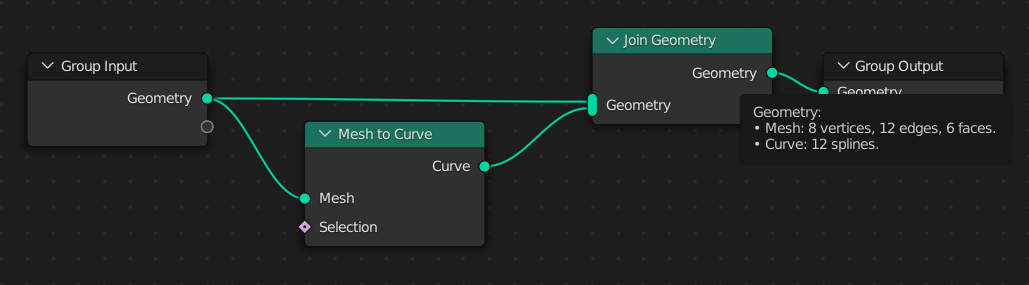

By this I mean things like Pure Data or Blender’s Geometry Nodes:

(This is actually plugdata, a fork of Pure-Data)

In these, it’s not uncommon to take a whole collection of nodes and collapse them into a box that can be reused. In English we’d usually either name them with a verb in the name (Like Join Geometry above) or make it an -ify or -ize. If we’re already making up new -ify and -ize words, we’re only one step away from making it a new language anyway.

In any type of programming (node based like this, or normal text-based), one thing we have to deal with a lot is naming functions and data. We sort of end up creating our own little languages for each project, where a foo_bar_comparator takes in a foo and bar. What a foo and bar even are, as a concept, is defined by the programmer and eventually we end up abstracting these ideas so much that new things just … exist. We accept the names as new terms.

Take things like “the internet” or “a CD” - even if we know what CD stands for (Compact Disc) we don’t think of it as that anymore. It’s just… a CD. The internet isn’t some vast network of interconnected computers, it’s just the internet. We’ve abstracted these ideas to the point we just accept them as broad concepts, becoming new words in their own right.

If we let users do this as they work, calling groups of complex ideas a new term and letting them work with these components, we can expand upon this natural process of levels of abstraction.

Taking this further, each user can optimize their typing using text expansion software. This means doing things like replacing commonly typed words and phrases with smaller strings of letters. For example, you could make it so that typing “brb” replaces those letters with “be right back”. This can be taken to extremes like the Compress tool from Erik Schluntz helps you do. Or you could go all in and learn Stenography, which is more-or-less the same idea on steroids.

By allowing users to really dig in and extend the functionality of programs by defining new concepts that make sense in their workflow and then letting them reuse those ideas later, tools that are made to be general purpose can evolve with the user’s needs and experience to become more powerful for exactly how they use it - this is what “power users” of most any program always want.
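The core of text expansion is small enough to sketch in a few lines. This is a toy, not how any particular expansion tool works: the `EXPANSIONS` table and the `expand` function are hypothetical, and I'm assuming abbreviations should only expand when they appear as whole words.

```python
import re

# Hypothetical personal abbreviations; any mapping would do.
EXPANSIONS = {
    "brb": "be right back",
    "omw": "on my way",
}


def expand(text: str, table: dict[str, str] = EXPANSIONS) -> str:
    """Replace whole-word abbreviations with their expansions."""
    if not table:
        return text
    # Build one pattern matching any abbreviation, bounded by word edges.
    pattern = re.compile(r"\b(" + "|".join(map(re.escape, table)) + r")\b")
    return pattern.sub(lambda m: table[m.group(1)], text)


print(expand("brb, grabbing coffee. omw after that."))
```

The table is the interesting part: it's effectively a tiny personal vocabulary that only you (and your computer) speak.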

I, for one, love the idea of having my own personal language for interacting with the tools I use at the speed of thought.

Languages For Interoperability #

While I’ve beaten the “universal language” dead horse already (music, math) I do think language can serve as a method of allowing for interoperability so long as you restrict it enough - like programming languages and shells do.

If you don’t have experience using a shell (like Command Prompt or Bash), imagine you have a program called read. All this program does is output the text of the file provided to the display, so you could run the command

read my_short_story.txt

Now imagine you have a command called replace which replaces one word with another in any text piped into it. By piping, I mean it can take the output of a previous command and pass it to the next one. To do this operation, let’s use the | character. So, we could run

read my_short_story.txt | replace teh the

to correct any mistyped “teh” into the word “the”.

Now imagine you have a whole suite of more interesting commands, ones that let you look for patterns like using [bc]at to find the word “bat” or “cat” but not “rat”, or letting you count the number of lines, or convert the file into a PDF. You can make these large chains out of existing programs, which is pretty sweet. This is more-or-less how using a command line on a macOS or Linux system works, and as you can imagine, getting used to it isn’t totally dissimilar from getting used to a new language. Honestly, with the amount of jargon in the command names (effectively a vocabulary) and rules for joining commands (not too dissimilar from a grammar), it may as well be one already.
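If a shell isn't handy, the same chaining idea can be mimicked in Python. This is a rough sketch under a few assumptions: `read` and `replace` stand in for the imaginary commands above, `find` is a name I made up for the pattern-matching step, and the story is an inline string rather than a file so the example stays self-contained.

```python
import re
from typing import Iterable, Iterator


def read(text: str) -> Iterator[str]:
    """Stand-in for the imaginary `read` command: yield a text line by line."""
    yield from text.splitlines()


def replace(lines: Iterable[str], old: str, new: str) -> Iterator[str]:
    """Stand-in for `replace`: swap whole-word occurrences of `old` for `new`."""
    pattern = re.compile(rf"\b{re.escape(old)}\b")
    for line in lines:
        yield pattern.sub(new, line)


def find(lines: Iterable[str], pattern: str) -> Iterator[str]:
    """Hypothetical `find`: keep only lines containing a match for `pattern`."""
    regex = re.compile(pattern)
    return (line for line in lines if regex.search(line))


story = "teh cat sat\nteh rat ran\nteh bat flew"  # made-up sample text

# Equivalent in spirit to: read story | replace teh the | find [bc]at
result = list(find(replace(read(story), "teh", "the"), r"\b[bc]at\b"))
print(result)
print(len(result))  # the "count the number of lines" step
```

Each function only knows how to take lines in and hand lines out, which is exactly the restriction that lets them snap together.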

Why are we not pushing boundaries? #

Defaults are powerful. It’s why United States v. Microsoft Corp. in 2001 was a problem in the first place. It’s why making it take even one more click to not accept cookies on a website works. The easiest, quickest option is what most people use, and when more people use it, it becomes harder to justify trying something new. Is QWERTY the best keyboard layout? I use Dvorak, so for me the answer is no, but every time I sit down at somebody else’s computer and try to type it’s incredibly disorienting. That’s the cost of not using the default.

So, while there are quite a few reasons things aren’t changing all that rapidly, the biggest is defaults. When it became easy to type Emoji, people used them. If there were a dedicated key for ≠ or ± on your keyboard, you’d probably use them more and programming languages wouldn’t have to use != for “not equal”.

Similarly, for many things not using the default would break compatibility with something. There are millions of computers out there that still today are running Windows 98 because they’re driving some industrial or medical equipment manufactured 20 years ago. Would it work on Windows 11? Maybe. Does anyone want to risk the downtime of trying? Hell no. Similarly, there are many cases when someone has made something that is miles ahead of everything else, but breaks all compatibility assumptions in the process. I’d love to run a Linux system using Cat9 and Durden, but the early adopter cost is too high.

For conlangs or anything requiring more than simple character rendering, there has to be application support. While some things, like Unicode becoming standard more-or-less everywhere, have helped (We can finally, reliably, know that using the ◌́ for Résumé will actually work almost everywhere), some natural scripts and many, many conlangs need features that are difficult to implement, such as:

- Having previous characters change depending on the next, like Arabic does: هذه جملة مثال

- Using a variable height line to connect words, like Sticks

- Having each word in a sentence being drawn on a line so that a space is not a space, like in Ogham: ᚛ᚁᚔᚃᚐᚔᚇᚑᚅᚐᚄᚋᚐᚊᚔᚋᚒᚉᚑᚔ᚜

- Combining some characters (or whole words) into single characters

- Being animated, like Timescript

- Using color for meaning, like ColorHoney

- Using a grid, like Eganes, Griddy Script, or Betamaze

Among many other, much weirder things. Not all text fields will support everything. If someone is writing a novel and they don’t have an easy way to make a font for their conlang that can render nicely in the text editor they’re using - or, worse, something the publisher can’t print - they might choose to use something that’s easier.
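Even the "easy" cases from the list above hide some complexity. As a small illustration using only Python's standard `unicodedata` module, here is the Résumé accent written two ways, plus a peek at the properties a renderer has to respect for Arabic and Ogham. The variable names are mine.

```python
import unicodedata

# "é" written two ways: one precomposed code point, or "e" plus a combining acute accent.
precomposed = "R\u00e9sum\u00e9"
combining = "Re\u0301sume\u0301"

print(len(precomposed), len(combining))  # different lengths, same visible text
print(precomposed == combining)
print(unicodedata.normalize("NFC", combining) == precomposed)

# Arabic letters carry a right-to-left direction property that renderers must honor.
print(unicodedata.bidirectional("\u0647"))  # ARABIC LETTER HEH

# Ogham has its own space character, which is drawn rather than left blank.
print(unicodedata.name("\u1680"), unicodedata.category("\u1680"))
```

If two spellings of the same word don't even compare equal without normalization, it's not hard to see why animated, colored, or grid-based scripts are a tough sell for a generic text box.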

If natural languages aren’t even supported very well, asking for good conlang support is obviously not going to be a priority for anybody. Computers may be amazing, but the success of standards does sometimes limit innovation.

You know pen and paper still exists, right?

Well, yeah, no shit. But humans like sharing their art and especially for relatively small communities with little to no local groups being able to interface digitally is a big deal. Obviously this is true for conlanging and neography communities. To do that they need tools with support for making complicated scripts that use lesser-used features you might find in various font and rendering standards like OpenType in a way that is affordable (font creation software ranges from awful and free to mediocre and expensive as hell) and intuitive.

This has led to a lot of conlangs that use the latin alphabet (often with a lot of diacritics) and while that’s not inherently a bad thing, it’s also unfortunate that doing something with more flair isn’t easier.

Outside of font creation tools, there are also some tools out there purpose-built for conlanging:

- PolyGlot - Used for organizing Lexicon, Phrasebook, Grammar, etc.

- akrantiain - “A domain-specific language designed to describe conlangs’ orthographies”

- lexifer - Word Generator

- Lexicanter - Used for organizing Lexicon, Phrasebook, Grammar, etc.

- VulgarLang - Language Generator

Unfortunately, many are either abandoned, extremely difficult for the less technically inclined to set up and use, or very limited. Still, it’s awesome that these exist!

Reflecting on change #

I think it can be easy to see conlangs as useless beyond art (Which, again, is enough to justify them on its own). Sure, if we count some programming languages as conlangs and consider some of the scripts that have specific applications - like for pixel art - we could salvage a few as “useful” but I think it’s also important to remember that all languages evolve pretty rapidly and we just sort of accept it. Some of what we’re used to now looks almost conlang-like if you squint at it right:

- Emoji

- Hashtags

- tone markers (/s)

- memes

These things aren’t really any one language - many of them keep their meaning regardless of the language they’re mixed in with. They’re conlangs used as domain specific languages that we sprinkle into our conversations. I don’t see how using a little toki pona or Rhapsodaic should be any different, if we could do so as easily and create enough cultural awareness for their meaning.

Conclusions #

I wrote this page both as an introduction to conlangs and as a “here’s what is possible if we think about language holistically” thing. The former point is really summarized by this meme. Conlangs stand on their own merits as incredible works of artistic expression, and that is independently more than enough to motivate their study, I think.

Still, I want to put a bow on the more technical point I was trying to make:

When we think about language as any expression starting in the mind of one person and being passed to another, and the influence of each choice along the way, we can think about it as a system instead of disparate parts.

Each language has a syntax that prescribes word order and sentence structure, a writing system (or multiple), a script (or multiple), and a way the script is written (be it by hand or into a computer).

A language may be for conveying general purpose ideas (English, Spanish, what you think of when someone says “language”) or it might be for a specific purpose - be it programming, music, etc.

While languages themselves are tools, the tooling for using the language is just as important to its usefulness.

Our tools are not perfect and, especially given the rise of large language models and developments in user interfaces (such as the proliferation of node-based editors), may be seeing significant changes soon.

The conlangs and neoscript communities have been thinking about these things for a lot longer than the AI Large Language Model crowd and almost all creative endeavors could benefit from trying to absorb even a fraction of what conlangers and neographers have to offer.

Before I wrap up, I want to say there’s an insane number of scripts I was unable to link here that I thought were more than deserving of being featured. It’s also notable that the only language I speak fluently is English, so my ability to even appreciate the scripts and conlangs more heavily rooted in other cultures is significantly more limited. This has led me to not feature many of the beautiful scripts that are heavily inspired by existing, natural Arabic, Asian, or braille scripts because I may not be the best judge of their quality. If you’d like to see more, I highly encourage you to dive into this yourself and take a stroll through the conscript world without my biases filtering it.

Resources #

Obviously this page is littered with links to omniglot.com and I can not express enough gratitude to Simon Ager for all the effort that must go into maintaining such an incredibly large resource.

Additionally, both r/conlangs and r/neography have some great posts, and each is awe-inspiring to scroll through.

In writing this page I read The Art of Language Invention by David J. Peterson and if you want a jam packed resource on the linguistic skills and concepts that go into conlanging, I can’t recommend it enough. It is more of a text book than a gentle stroll through conlanging though.

If you’d like more of an overview of the conlanging culture, existing languages, etc. - similar to my goals with this page - you may want to watch the documentary Conlanging: The Art of Crafting Tongues which, unlike this page, actually had a budget. It’s a fantastic watch and acts as a sort of language focused behind-the-scenes look into a few of the biggest franchises which have used conlangs on screen.

Other links you may want to check out include:

A special thanks to these people for helping me revise this page:

- jan Usawi

- Phlegm