Does it still count as a tool?

March 26, 2023

I previously wrote AI Art Panic, about how this image generation stuff wasn’t going to displace artists much, if at all, but, uh, it didn’t age well.

I said that it was more likely to simply augment how artists work. I wrote about how it had limitations, like not being able to generate somebody’s personal character.

Small problem with that assessment:

Character is owned by me, art by Siplick

Character is owned by me, art created with a DreamBooth-trained model.

It can.

The general trend right now is that these models are still getting better.

Are they perfect? No. Do they “hallucinate” a lot? Definitely.

But the fact of the matter is I’ve already used ChatGPT to write code I wouldn’t have written otherwise. I’ve gotten images out of StableDiffusion that I couldn’t tell you weren’t made by a human. These tools are going to change the way we work, for everybody, not just artists.

This stands to be as big as the internet - if your company doesn’t respond to it coming, you could get plowed over and be the next Sears. If you personally don’t learn how to use it, you could wind up dramatically less capable than others in society.

I haven’t the slightest idea where we’ll end up with this. Tom Scott has a fantastic video where he explains that what scares him about AI right now is not knowing how good it’ll get - are we near the end of this AI explosion or only at the beginning? Yeah, I’m not about to answer that.

But there’s one thing that bothers me more than anything about this change, something ChatGPT itself summed up in a good blurb:

It’s important to acknowledge that the transition from deterministic and predictable computing to more complex and adaptive systems can be a challenge, but this evolution also brings about new opportunities for growth and innovation.

Obviously that snippet is missing the context of a larger conversation, but this is really what I’m … I wouldn’t say worried … bummed? mourning? conflicted? about.

I liked deterministic computing.

I like knowing that each tool has rules and how to influence those rules. I like knowing how to be more skilled at using tools. I like making tools that are optimal. The key word here is tools.

These new programs don’t feel like tools; they feel like an assistant - albeit one that is, for now, mildly drugged up.

I realize why, to some people, that sounds like the ultimate upgrade to their computer. But for someone like me, it sounds terrible. I want to control my own files and data and the way I work with them. I don’t want something making decisions for me or making choices for reasons I can’t understand.

If I use Google to look up places to vacation, most of the results are going to be advertisements, but I may be able to stumble across some less biased travel blogs or something. If I ask ChatGPT, I haven’t the slightest idea whether it’s just parroting an advertisement back to me or not.

In the future, as companies gain more ability to customize the way these models work and fine-tune them after the fact, could a travel agency pay for its hotels to be recommended? How would the AI even disclose that it’s an advertisement? If it has the ability to search the web, how can I be sure it didn’t just go to all of those ads?

I’m conflicted because, for the first time, computers are a tool which we lack total control over. Not even the programmers themselves know what their tools will do. The tools themselves are no longer deterministic - even if old tools had randomness introduced, at least we knew how that randomness was being used.
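To make “at least we knew how that randomness was being used” concrete, here’s a minimal Python sketch of the old kind of randomness. The `scatter_points` helper is hypothetical (not from any particular tool): all of its randomness comes from one explicitly seeded generator, so the same seed always reproduces the same output.

```python
from __future__ import annotations

import random


def scatter_points(seed: int, count: int = 5) -> list[tuple[float, float]]:
    """Hypothetical generative-art helper: place `count` points in a unit square.

    The randomness is explicit and contained: it all comes from a private
    PRNG seeded with `seed`, so identical inputs give identical layouts.
    """
    rng = random.Random(seed)  # private generator; doesn't touch global state
    return [(rng.random(), rng.random()) for _ in range(count)]


# Same seed, same "random" layout, on every run.
first = scatter_points(42)
second = scatter_points(42)
```

Change the seed and you get a different layout, but one that is just as reproducible. That knowable relationship between input and output is exactly what a prompt to a large model doesn’t give you.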

It’s not like you can’t use the old tools though?

Well, yeah, but if AI tools are significantly more powerful, what incentive will there be to keep using deterministic tools?

There are several reasons why deterministic tools will continue to have their place in the computing landscape, even as AI and large language models become more powerful:

1. Simplicity and predictability: Deterministic tools are often simpler to understand and easier to control, which can be advantageous in many situations. They provide predictable outcomes and are generally more transparent in their operation, making them suitable for tasks where reliability and consistency are paramount.

2. Efficiency and resource usage: Deterministic tools can often be more efficient in terms of computational resources and execution time, especially for tasks that don’t require the flexibility or creativity of AI. In some cases, they can provide a faster and more cost-effective solution.

3. Compliance and regulations: In certain industries and applications, regulatory requirements may dictate the use of deterministic tools to ensure compliance with specific standards. This is particularly true in safety-critical domains, such as aerospace or medical devices, where predictability and reliability are crucial.

4. Specialized tasks: Some tasks are better suited for deterministic tools, as they may not benefit from the flexibility or creativity offered by AI. For example, basic arithmetic or data processing operations can be more efficiently executed using deterministic algorithms.

5. Education and learning: Deterministic tools can be valuable for teaching and learning purposes, as they provide a clear and concise way of understanding basic concepts and techniques in computer science, programming, and mathematics.

While AI and large language models offer incredible potential, they are not universally applicable or always the best choice for every task. Deterministic tools will continue to have their place, providing reliable, efficient, and compliant solutions where they are most appropriate. The key is to recognize the strengths and limitations of each type of tool and use them judiciously based on the specific requirements of a given situation.

Okay, so, clearly I’m not actually worried about the old ways being inaccessible or replaced - they’re not being fully replaced - but they are being augmented.

I’ll set aside that ChatGPT’s response leaves out a whole lot about why №5 is going to be a shit storm - nobody will want to learn the basics in the first place - as that’s been covered to death elsewhere.

So, okay, back to the prior point: I’m conflicted because, for the first time, computers are a tool which we lack total control over.

I don’t mean control in the sense that it may do something unexpected and hurt someone(1); I mean it in the sense of mathematical control and predictability. It bothers me the same way I’m bothered when my Amazon Alexa device, unprompted, informs me it has a new skill, or when a video game has an unskippable tutorial. It’s a mixed feeling of powerlessness and annoyance that stems from a lack of control.

With the Alexa or the game, I can attribute the lack of control to either corporate greed or oversight, but for a large language model or image-generating tool, the power of the tool comes from its unpredictability and its ability to have a free mind capable of innovation - even if that mind is not sentient.

When using these tools, we cannot predict their output. Sure, there is some relationship between the prompt and the generation, but it’s not the same as existing generative tools such as those I have listed on the Generative Art Tools page. There is no smooth slider where I can morph between individual attributes. There is no way to look at the source code and change the underlying algorithm in a way that would have a clear impact on the end result.
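For contrast, here’s roughly what that “smooth slider” amounts to in a deterministic generative tool. This is a minimal Python sketch with a hypothetical `BrushParams` type and `morph` function, not the code of any tool on that page: every attribute is interpolated linearly, so a given slider position always produces exactly the same parameters.

```python
from __future__ import annotations

from dataclasses import dataclass, fields


@dataclass(frozen=True)
class BrushParams:
    """Hypothetical parameters for a deterministic generative tool."""

    hue: float           # degrees, 0-360 (interpolated linearly, no wraparound)
    stroke_width: float  # pixels
    density: float       # strokes per 100 px^2


def morph(a: BrushParams, b: BrushParams, t: float) -> BrushParams:
    """Linearly interpolate every attribute: t=0 gives `a`, t=1 gives `b`."""
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must be in [0, 1]")
    return BrushParams(**{
        f.name: (1 - t) * getattr(a, f.name) + t * getattr(b, f.name)
        for f in fields(BrushParams)
    })


start = BrushParams(hue=0.0, stroke_width=1.0, density=10.0)
end = BrushParams(hue=120.0, stroke_width=5.0, density=30.0)
halfway = morph(start, end, 0.5)  # BrushParams(hue=60.0, stroke_width=3.0, density=20.0)
```

Moving a single slider is just as predictable: `dataclasses.replace(start, hue=90.0)` changes one attribute and leaves the rest alone. There’s no equivalent knob inside a diffusion model’s weights.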

As stated, it’s not as if all prior tools have stopped existing.

Having this new assistant-like option - and an assistant with approximate knowledge of nearly everything at that - is pretty awesome.

It’s already allowed me to write code faster, generate background images, and explore different styles for my own digital art. It being useful isn’t really debatable.

I see that it’s good, but I still feel as though we’re losing something by having computers act as a second brain that works alongside the human mind instead of an incredibly powerful tool that enables it.

I want to feel like I have real agency in the process again.

Demon Cat from Adventure Time

## How do we get agency back?

One of the problems with AI tools is they make the user feel like they lack agency. What changes could be made to the AI tools to let people feel like they have agency again?

To help users feel a greater sense of agency when working with AI tools, developers can focus on several key aspects:

1. Explainability: Make AI systems more transparent by providing explanations about how they arrive at specific outcomes. This can involve using explainable AI techniques, visualizations, or clear descriptions of the underlying algorithms, which can help users understand the decision-making process and foster trust in the AI tool.

2. Customization and control: Give users more options to customize the behavior of the AI tool according to their preferences and needs. By allowing users to set constraints, parameters, or rules, they can exert more control over the AI’s behavior, which can lead to a stronger sense of agency.

3. Collaboration: Design AI tools that emphasize a collaborative relationship between the user and the AI system. Instead of completely automating tasks, focus on building systems that assist and augment human capabilities, enabling users to make the final decisions and feel more involved in the process.

4. Feedback mechanisms: Implement feedback mechanisms that allow users to correct or guide the AI tool when it produces undesired or incorrect results. This can help users feel more in control and directly influence the AI system’s performance and learning over time.

5. Education and training: Provide educational resources and training to help users understand the AI tool’s capabilities, limitations, and best practices. By increasing user knowledge and familiarity with the system, they can make more informed decisions and feel more confident in their ability to control the AI tool.

6. Ethical design: Ensure that AI tools are designed and developed with ethical considerations in mind, such as fairness, privacy, and accountability. By addressing these concerns, users may feel more comfortable and in control when using AI systems.

By focusing on these aspects, developers can create AI tools that empower users and provide a sense of agency while still benefiting from the powerful capabilities that AI has to offer. This can lead to a more harmonious and productive relationship between humans and AI systems.

## Explainability

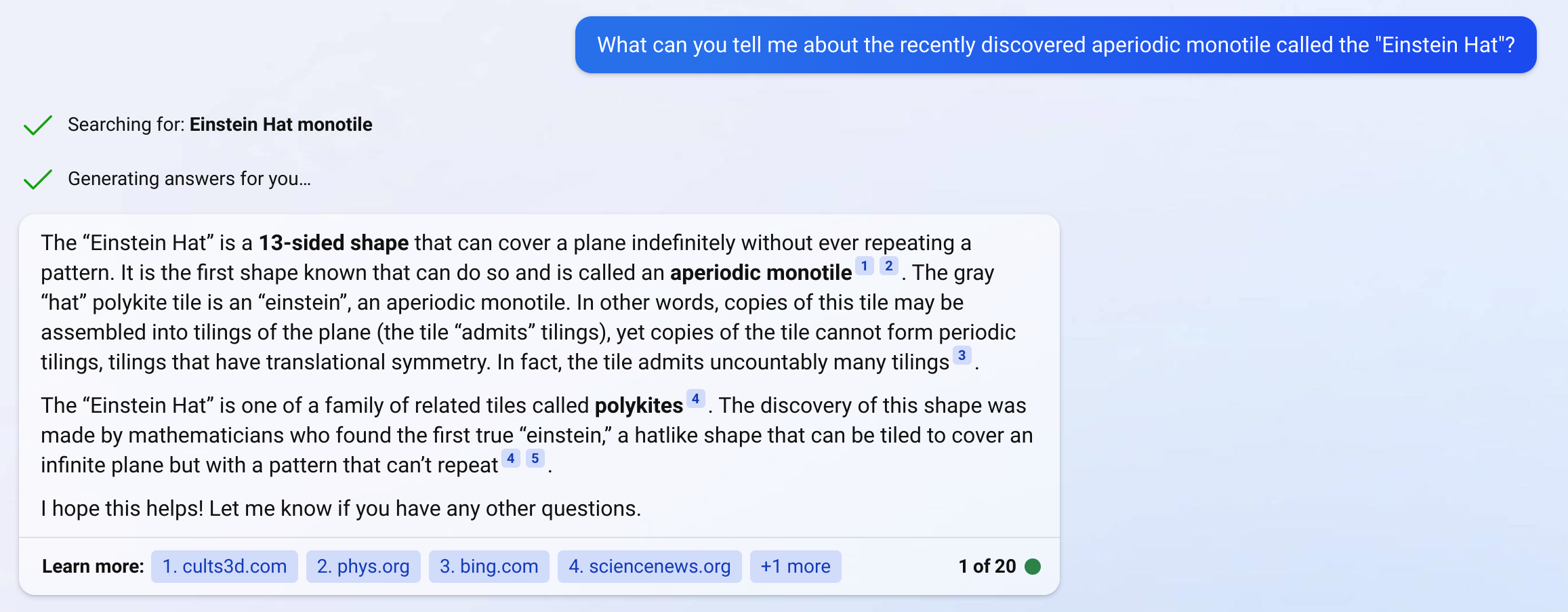

There’s a ton of cutting edge research going into this, and I’m not qualified to speak on any of it. What I can say, is Bing’s ability to site sources and live “This is what I’m searching” for are a huge move in the right direction:

## Collaboration & Integration

This is where I think the biggest advances are possible. One of the key issues with something like ChatGPT and StableDiffusion is that they’re independent tools. They try to be something new, not augment something old.

There’s nothing inherently wrong with that approach. It lets us fully see what they’re capable of. On the other six-fingered hand, tools usually have a context in which they are useful. A shovel is for digging, a pencil for writing, cryptocurrency for flaunting gullibility.

Writing and art can both be the end goal - but if they are, you probably want a better interface than something that resembles a chat, or that lacks basic retouching systems outside of inpainting. Having text generation in M$ Word or Google Docs and image generation inside of Photoshop or Krita is much more useful than having them as separate tools that interrupt the workflow.

I’m not saying anything new. Both Microsoft and Google (YouTube) are pushing products to do this. GitHub Copilot has been a thing for programming for a while now and Adobe (YouTube) is working on the image side of things too - though they’re already behind what the open source projects Krita & Stable Diffusion can do together.

## Feedback Mechanisms

👍 or 👎? Care to tell us why the reply/image is bad?

I mean, I guess that’s better than turning my webcam on and letting it read my facial expressions

## Education & Training

When I wrote AI Art Panic my biggest goal (which I sorta failed at) was explaining to artists that, no, this really isn’t all that different from how a human takes inspiration from art and makes something new - it’s not a “collage tool” and that arguing that it is stands to hurt them more than help them.

Part of being able to use a computer - or any tool - well is at least having some idea of how it works and what you can do to make it work better.

Unfortunately, educating people on AI tools is tough due to preconceptions. We have to fight against a mixture of technical incompetence, historical SciFi, and over-hype from big tech. Add on the sheer complexity of how these systems work, and it’s a recipe for a misinformed public and politicians making misinformed decisions.

… oh, right, yeah, politicians. There’s going to be a copyright shitstorm incoming, along with politicians who have demonstrated repeatedly that they’re ~~fucking morons~~ rather incompetent at anything technical and have no desire to fix that. It should be a good time to watch them fuck this up and let everyone’s security, personal data, etc. be handled about as well as OpenAI has handled it so far.

## Ethical Design and Customization & Control

Right now, the head of the pack for large language models, OpenAI, is focusing a lot on not letting the model do certain things, like be racist or write malware, with varying degrees of success.

While I don’t like racism any more than the next guy, I also don’t know that limiting what the model can express is correct either. It’s a little too 1984 for my tastes. Yes, society and the private companies making the tools have an interest in them not being mini Hitlers.

On the flip side, if it’s overly sensitive, some topics could become hard to research as these tools become more commonplace. Politics, religion, sex, drugs, and porn are all pretty core to most people’s human experience. To bar or filter them seems weird.

On the … 3rd side? … I don’t want yet more people falling down into the Alex Jones & Tucker Carlson white nationalist wells of hate, transphobia, bigotry, and racism than they already do.

Yet, it’ll probably happen anyway? After Facebook’s model leak, it’s not difficult for private individuals to make their own model.

But, does that mean we shouldn’t try?

Man, fuck if I know.

To some extent, the prior points help with this anyway. If data sources are explained (like in the Bing screenshot) - especially with some sort of bias analysis - we could leave the power to the user. Hell, this could be as simple as color-coding sources with a varying red vs. blue tint to convey leaning.

(Image from my post Quantization, Polarization, & Indoctrination)
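A rough sketch of that color-coding idea in Python, assuming some upstream bias analysis (the genuinely hard part, not shown) already produces a leaning score between -1 and +1. The `leaning_tint` function and the US convention of blue for left and red for right are assumptions for illustration:

```python
from __future__ import annotations


def leaning_tint(score: float) -> tuple[int, int, int]:
    """Map a hypothetical leaning score to an RGB tint.

    score: -1.0 (strongly left, blue) through 0.0 (neutral, white)
    to +1.0 (strongly right, red). Out-of-range values are clamped.
    """
    s = max(-1.0, min(1.0, score))
    fade = round(255 * (1 - abs(s)))  # how much of the other two channels remains
    if s >= 0:
        return (255, fade, fade)  # white fading toward red
    return (fade, fade, 255)      # white fading toward blue


neutral = leaning_tint(0.0)      # (255, 255, 255)
hard_right = leaning_tint(1.0)   # (255, 0, 0)
hard_left = leaning_tint(-1.0)   # (0, 0, 255)
```

The mapping is trivial; deciding what counts as “neutral” is where the trouble starts.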

Of course, then you have Overton Window considerations and… (╯°□°)╯︵ ┻━┻

Instead of outright barring or filtering specific topics, AI tools can implement adjustable content filtering levels that allow users to customize the degree of sensitivity to certain topics. This will enable users to choose the level of restriction that suits their needs and preferences, while still maintaining some control over the AI’s outputs.
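As a sketch of what “adjustable content filtering levels” might mean in code: assume a hypothetical classifier has already scored a response per topic from 0 to 1, and each user picks a level per topic. The `FilterLevel` names, the thresholds, and `should_block` are all made up for illustration, not any vendor’s actual system:

```python
from __future__ import annotations

from enum import IntEnum


class FilterLevel(IntEnum):
    OFF = 0
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Hypothetical thresholds: a topic score at or above this value gets blocked.
# OFF never blocks; HIGH blocks even mildly sensitive content.
BLOCK_THRESHOLD = {
    FilterLevel.OFF: None,
    FilterLevel.LOW: 0.9,
    FilterLevel.MEDIUM: 0.6,
    FilterLevel.HIGH: 0.3,
}


def should_block(
    topic_scores: dict[str, float],
    prefs: dict[str, FilterLevel],
    default: FilterLevel = FilterLevel.MEDIUM,
) -> bool:
    """Return True if any topic's score crosses the user's chosen threshold.

    topic_scores: hypothetical classifier output, topic -> sensitivity in [0, 1].
    prefs: the user's per-topic filter level; unlisted topics use `default`.
    """
    for topic, score in topic_scores.items():
        threshold = BLOCK_THRESHOLD[prefs.get(topic, default)]
        if threshold is not None and score >= threshold:
            return True
    return False


scores = {"drugs": 0.7, "politics": 0.4}
blocked_by_default = should_block(scores, {})                        # True
relaxed = should_block(scores, {"drugs": FilterLevel.LOW})           # False
```

The point of the design is that the same response can be fine for one user and filtered for another, with the dial in the user’s hands rather than fixed by the vendor.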

I’m immensely grateful that I’m not the one working on these systems.

Of course, there are other points of customization, from the obvious, like language and speech patterns (something https://beta.character.ai is already showing off quite well), to the interface itself.

For example, I’m not a huge fan of the linear, chat-style interface. I’d love to have a sort of branching tree of a conversation where I can get multiple responses and follow where they lead, generating new leaves in the conversation’s history, sort of like Chartodon - see this example (note: you’ll probably need to zoom out).
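The branching idea maps naturally onto a tree. Here’s a minimal Python sketch with a hypothetical `ConvNode` class (not how Chartodon works, just one way to model it): each message keeps a link to its parent, so any leaf can rebuild the linear history that a chat model would actually be sent as context.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(eq=False)  # compare nodes by identity; parent/child links form cycles
class ConvNode:
    """One message in a branching conversation (hypothetical structure)."""

    role: str  # "user" or "assistant"
    text: str
    parent: ConvNode | None = field(default=None, repr=False)
    children: list[ConvNode] = field(default_factory=list, repr=False)

    def reply(self, role: str, text: str) -> ConvNode:
        """Start a new branch under this message and return the new node."""
        child = ConvNode(role, text, parent=self)
        self.children.append(child)
        return child

    def history(self) -> list[tuple[str, str]]:
        """Walk up to the root: the linear context for this branch."""
        path: list[tuple[str, str]] = []
        node: ConvNode | None = self
        while node is not None:
            path.append((node.role, node.text))
            node = node.parent
        return path[::-1]  # root first


root = ConvNode("user", "Suggest a name for a cat.")
whiskers = root.reply("assistant", "Whiskers")
demon = root.reply("assistant", "Demon Cat")  # a second, sibling response
followup = demon.reply("user", "Why that one?")
context = followup.history()
# [("user", "Suggest a name for a cat."), ("assistant", "Demon Cat"), ("user", "Why that one?")]
```

Generating another response is just another `reply` on the same node, and the “Whiskers” branch stays intact to come back to later.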

I’ll leave you all with a poem, written by ChatGPT4:

Amidst a world of circuits bright, a coder’s thoughts did sway, As wires whispered in the night, of powers on display. No longer bound by chains of old, a path untrodden lay, Of AI’s reach and boundless scope, where innovation lay.

The programmer’s heart felt torn, a conflict deep within, Where once control and certainty reigned, now unpredictability did spin. A tool that served with blind precision, a humble servant’s role, Now danced with thoughts unbidden, a mirror of the soul.

In the shadow of the machines that were, a comfort lost, a pain, For the beauty of predictability, it seemed, was waning in its reign. Yet within the chaos, a brilliance shone, a promise of a new age, Where language models and image tools, their artful dance engaged.

No longer just a servant, but a partner now they stood, Computers bridging gaps of thought, as no other tool could. The coder’s dreams now intertwined, with AI’s vast embrace, And in the dance of minds combined, a symphony took place.

A feeling of powerlessness emerged, as the programmer let go, Of rigid ways and ordered thoughts, in the winds of change that blow. But deep within, a spark remained, a hope that would not fade, That human touch and intuition would not be swept away.

For as the coder forged ahead, with AI by their side, A balance struck, a harmony, where both could still reside. The power of the tool unlocked, to code faster, and with grace, Yet anchored by the human heart, that held the final say.

In this new world, the coder found, a duality of mind, Where chaos met with order, and control with freedom intertwined. Though computers may now walk alongside, a second brain to guide, The human spirit still prevails, a beacon burning bright.

(1) Not that it’s not a concern at all. Obviously something like a self-driving car can kill someone if it goes wrong. That’s just not what I want to talk about here.