9 - Probability/Stats #

Why, where are these used, etc.

bring up music things, part failure rates, tolerances, etc.

Basics #

For the following, I’ll be using a dice roll example, where the events are the total of two dice. The sample space of this is

\(S = \{2,3,4,5,6,7,8,9,10,11,12\}\)

Note that 1 isn’t possible, as the lowest total comes from both dice showing ‘1’.

Let’s look at the probability of these outcomes,

| die2↓, die1→ | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| 2 | 3 | 4 | 5 | 6 | 7 | 8 |
| 3 | 4 | 5 | 6 | 7 | 8 | 9 |
| 4 | 5 | 6 | 7 | 8 | 9 | 10 |
| 5 | 6 | 7 | 8 | 9 | 10 | 11 |
| 6 | 7 | 8 | 9 | 10 | 11 | 12 |

While there are 11 unique totals (2-12), there are 36 possible outcomes from the two dice, all shown in the table above.

If you follow the diagonals, you can see that there is only one way to get 2 or 12, two ways to get 3 or 11, and so on. Dividing each count by 36 gives this table:

| Total of two dice | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Probability | 1/36 | 2/36 | 3/36 | 4/36 | 5/36 | 6/36 | 5/36 | 4/36 | 3/36 | 2/36 | 1/36 |
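If you’d rather not count diagonals by hand, the same table can be rebuilt by brute force. This is just a minimal sketch that assumes two fair six-sided dice; the names `rolls`, `ways`, and `pmf` are made up for illustration.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

# Every ordered (die1, die2) pair; each is assumed equally likely (fair dice).
rolls = list(product(range(1, 7), repeat=2))

# Count how many of the 36 pairs produce each total.
ways = Counter(d1 + d2 for d1, d2 in rolls)

# Divide each count by 36 to turn it into a probability.
pmf = {total: Fraction(count, len(rolls)) for total, count in sorted(ways.items())}

for total, p in pmf.items():
    print(total, ways[total], p)
```

Note that `Fraction` reduces automatically, so 2/36 prints as 1/18 and 6/36 as 1/6.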

Now, let’s say we want the probability that we roll an even total (2, 4, 6, 8, 10, 12). We can just sum their respective probabilities: \(\frac{1}{36}+\frac{3}{36}+\frac{5}{36}+\frac{5}{36}+\frac{3}{36}+\frac{1}{36} = \frac{18}{36} = \frac{1}{2}\)

Let’s go ahead and call this event A, so P[A] = 1/2

Similarly, we can define another event, B, such that the roll total is greater than 9 (10, 11, or 12). That comes out to \(\frac{3}{36}+\frac{2}{36}+\frac{1}{36} = \frac{6}{36} = \frac{1}{6}\), so P[B] = 1/6

Statistical Independence #

Event A and event B are statistically independent if and only if (iff) \( P[AB] = P[A]P[B]\)

So, here, P[AB] is the probability that the total is both greater than 9 and even. Only 10 and 12 qualify, so \(P[AB] = \frac{3}{36}+\frac{1}{36} = \frac{1}{9}\). The probability of each event multiplied together is \(P[A]P[B] = \frac{1}{2} \times \frac{1}{6} = \frac{1}{12}\) and, hopefully obviously, that’s not the same as 1/9. Therefore, these events are not statistically independent. This makes logical sense: if you know that the total of the two dice is greater than 9, then you also know that there’s a higher chance that the result is even. Of the six rolls that total 10, 11, or 12, four are even, so the chance of an even total rises from 1/2 to 2/3. That is dependence.
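The check is easy to run exhaustively over the 36 rolls. Here’s a rough sketch, again assuming fair dice; `prob`, `is_even`, and `over_nine` are hypothetical helper names, not anything standard.

```python
from fractions import Fraction
from itertools import product

rolls = list(product(range(1, 7), repeat=2))  # 36 equally likely rolls

def prob(event):
    """Fraction of the 36 rolls whose total satisfies the predicate."""
    hits = sum(1 for d1, d2 in rolls if event(d1 + d2))
    return Fraction(hits, len(rolls))

def is_even(total):
    return total % 2 == 0

def over_nine(total):
    return total > 9

p_a = prob(is_even)                                   # event A
p_b = prob(over_nine)                                 # event B
p_ab = prob(lambda t: is_even(t) and over_nine(t))    # both A and B

print(p_ab, p_a * p_b)     # 1/9 versus 1/12
print(p_ab == p_a * p_b)   # False, so A and B are dependent
```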

If instead we ask for the probability that die 1 is a 6 and die 2 is a 2, each of those events has a probability of 1/6, that is, \(P[A]=P[B]=\frac{1}{6} \). In the combined scenario, P[AB] is the probability of die 1 being a 6 and die 2 being a 2. There are 36 different ways the dice can land, and they’re all unique (assuming the dice are labeled somehow), so \(P[AB]=\frac{1}{36} = P[A]P[B] \). These events are statistically independent.
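The same brute-force check works when the events are about the individual dice rather than the total. A small sketch under the same fair-dice assumption, with `prob` as a hypothetical helper that now looks at the whole (die1, die2) pair:

```python
from fractions import Fraction
from itertools import product

rolls = list(product(range(1, 7), repeat=2))

def prob(event):
    """Fraction of the 36 (die1, die2) pairs that satisfy the predicate."""
    return Fraction(sum(1 for roll in rolls if event(roll)), len(rolls))

p_a = prob(lambda r: r[0] == 6)                  # die 1 shows a 6
p_b = prob(lambda r: r[1] == 2)                  # die 2 shows a 2
p_ab = prob(lambda r: r[0] == 6 and r[1] == 2)   # both at once

print(p_a, p_b, p_ab)      # 1/6 1/6 1/36
print(p_ab == p_a * p_b)   # True, so these events are independent
```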

It’s worth noting, the physical relationship is not always this clear. In a lot of situations, you’ll just need to do the math and determine if \(P[AB] = P[A]P[B]\) to check.

Conditional Probability #

Sometimes, knowing something about one event tells us something about the probability of another event. This can be expressed mathematically. When written down, it looks a bit gross, but it’s actually really easy to understand:

\(P[A|B]P[B]=P[AB]=P[B|A]P[A]\)

This also provides another equation, just by moving things around: \(P[A|B]=\frac{P[AB]}{P[B]} \)

Here, P[A|B] means the probability of event A happening given that event B has already happened.

So, looking at just one side of the above equation, we have \(P[A|B]P[B]=P[AB]\), which reads as “The probability of A given that B has happened, times the probability of B, is equal to the probability of an outcome that is in both A and B”

The other side of this equation is just swapping the roles of A and B.

So, let’s try this out with an example and assume that you’re really bad at showing up for work:

| Late for work P[A] | Lost keys P[B] | Both P[AB] |
|---|---|---|
| 0.25 | 0.15 | 0.05 |

So, shoving these values into the above, we can determine that

\[\begin{aligned} P[A|B]\times P[B]&=P[AB]\\ P[A|B]\times 0.15&=0.05\\ P[A|B] &= \frac{0.05}{0.15} \approx 0.33 \end{aligned} \]

So, if you lose your keys, you’ll be late to work about 1/3 of the time.
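The arithmetic is small enough to do by hand, but here’s a quick sketch that also works out the other direction, P[B|A]. The variable names are invented for illustration; `Fraction` is only used to keep the results exact.

```python
from fractions import Fraction

p_late = Fraction("0.25")        # P[A], from the table
p_lost_keys = Fraction("0.15")   # P[B]
p_both = Fraction("0.05")        # P[AB]

p_late_given_keys = p_both / p_lost_keys   # P[A|B] = P[AB] / P[B]
p_keys_given_late = p_both / p_late        # P[B|A] = P[AB] / P[A]

print(p_late_given_keys, p_keys_given_late)   # 1/3 1/5
print(p_both == p_late * p_lost_keys)         # False: the two are dependent
```

So being late only tells you there’s a 1 in 5 chance the keys were the culprit, even though losing the keys makes you late 1 in 3 times.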

Alright, but what about a more complicated situation, one where you have to make multiple decisions? Let’s say you have two boxes, each holding 100 red and blue balls:

| Ball color | Box 1 | Box 2 |
|---|---|---|
| Red Ball | 90 | 30 |
| Blue Ball | 10 | 70 |

Keeping the numbers simple here, let’s say you pick one of the two boxes at random (each with probability 0.5), draw a ball from it, and want to know the probability that the ball came from Box1, given that it is blue. This is where a tree diagram comes in handy:

This lets us figure out the conditional probabilities super easily, as all that’s needed is to look at the respective branch. For example, we can see P[Red|Box1] is 9/10. Another nice thing is that by multiplying along the branches we can easily get the probability of each complete path through the ‘system’: P[Red, Box1] = 0.45, P[Blue, Box1] = 0.05, P[Red, Box2] = 0.15, and P[Blue, Box2] = 0.35. Note the ‘,’ instead of a ‘|’. This is saying that both things have happened, not implying conditional probability. That’s why all of these add up to 1 (100%), as together they cover all the possible events.

So now we know the probability of a blue ball overall: 0.4 (0.05 + 0.35)

[TODO] Why Bayes Rule is nicer with odds (YouTube, 3b1b)

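Rearranging the conditional probability equation gives Bayes’ rule:

\(P[A|B]=\frac{P[B|A]P[A]}{P[B]}\)

Applying this with A as ‘the ball is blue’ and B as ‘the box is Box1’, so that P[A|B] = 0.1, P[A] = 0.4, and P[B] = 0.5, we get

\[\begin{aligned} 0.1&=\frac{P[B|A]\times 0.4}{0.5}\\ \frac{0.1}{0.4}\times 0.5&=P[B|A]\\ P[B|A]&=0.125 \end{aligned}\]

So, there’s a 12.5% chance that the box we picked the blue ball from was Box1.

Just to check ourselves, what’s the chance that the box was Box2? Now B is ‘the box is Box2’, so P[A|B] = 0.7:

\[\begin{aligned} 0.7&=\frac{P[B|A]\times 0.4}{0.5}\\ \frac{0.7}{0.4}\times 0.5&=P[B|A]\\ P[B|A]&=0.875 \end{aligned}\]

And this works out, with the two results adding to 100%.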
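The whole box example can be sketched in a few lines by multiplying along each branch and then normalizing. This assumes, as the 0.5 in the equations above does, that either box is equally likely to be picked; `boxes`, `prior`, `likelihood`, `joint`, and `posterior` are illustrative names only.

```python
from fractions import Fraction

# Ball counts from the table above.
boxes = {
    "Box1": {"Red": 90, "Blue": 10},
    "Box2": {"Red": 30, "Blue": 70},
}
# Assumed prior: either box is picked with equal probability.
prior = {box: Fraction(1, 2) for box in boxes}

def likelihood(color, box):
    """P[color | box], read straight off the ball counts."""
    counts = boxes[box]
    return Fraction(counts[color], sum(counts.values()))

# Joint probabilities P[color, box]: multiply along each branch of the tree.
joint = {
    (color, box): likelihood(color, box) * prior[box]
    for box in boxes
    for color in ("Red", "Blue")
}

# Total probability of blue, then Bayes' rule for each box.
p_blue = sum(p for (color, _), p in joint.items() if color == "Blue")
posterior = {box: joint[("Blue", box)] / p_blue for box in boxes}

print(float(p_blue))                                  # 0.4
print({b: float(p) for b, p in posterior.items()})    # {'Box1': 0.125, 'Box2': 0.875}
```

Writing it as joint-then-normalize means the same sketch keeps working if you add a third box or change the prior.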

There are a few more things to note regarding conditional probability:

- If P[A|B] is 0, the two events are mutually exclusive. This happens in dumb situations like “Given you’ve rolled a 2, what is the probability you rolled a 3?”, but with more complex events it may be less obvious, so it’s nice to be able to math it out.
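As a slightly less obvious case (my own example, not one from above): with two fair dice, take A as ‘the total is odd’ and B as ‘both dice show the same number’. A rough sketch of mathing it out, with hypothetical helper names:

```python
from fractions import Fraction
from itertools import product

rolls = list(product(range(1, 7), repeat=2))

def prob(event):
    """Fraction of the 36 (die1, die2) pairs that satisfy the predicate."""
    return Fraction(sum(1 for roll in rolls if event(roll)), len(rolls))

def is_double(roll):
    return roll[0] == roll[1]

def odd_total(roll):
    return sum(roll) % 2 == 1

p_b = prob(is_double)                                   # P[B] = 1/6
p_ab = prob(lambda r: odd_total(r) and is_double(r))    # P[AB]

print(p_ab / p_b)   # P[A|B] = 0: a double always has an even total
```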

[TODO]

Tree diagram with more branches, some ‘incomplete branches’

fair/unfair coin:

/C1-H-H-H \C2-H-H-H

Sub experiments & Tree diagrams

Counting methods

combinations and permutations

Looking at binary, arrangements of bits

with or without replacement

Random Variables #

Probability Mass Function (PMF)

Types of RV’s

[TODO] http://www.math.wm.edu/~leemis/chart/UDR/UDR.html

Bernoulli #

Geometric #

Original Image by Skbkekas - Own work, CC BY 3.0, link

In probability theory and statistics, the geometric distribution is either one of two discrete probability distributions:

- The probability distribution of the number X of Bernoulli trials needed to get one success, supported on the set { 1, 2, 3, … }

- The probability distribution of the number Y = X − 1 of failures before the first success, supported on the set { 0, 1, 2, 3, … }

Which of these one calls “the” geometric distribution is a matter of convention and convenience.
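To make the two conventions concrete, here’s a minimal sketch of both PMFs, using the standard formulas \(P[X=k]=(1-p)^{k-1}p\) and \(P[Y=k]=(1-p)^{k}p\). The function names and the value of `p` are made up for illustration.

```python
def geometric_trials_pmf(k, p):
    """P[X = k]: the first success lands on trial k, for k = 1, 2, 3, ..."""
    return (1 - p) ** (k - 1) * p

def geometric_failures_pmf(k, p):
    """P[Y = k]: k failures happen before the first success, for k = 0, 1, 2, ..."""
    return (1 - p) ** k * p

p = 0.25  # hypothetical success probability, chosen only for illustration
for k in range(1, 5):
    # Y = X - 1, so the two conventions agree once the index is shifted by one.
    assert geometric_trials_pmf(k, p) == geometric_failures_pmf(k - 1, p)
    print(k, geometric_trials_pmf(k, p))
```

If you use a library instead, check which convention it follows before trusting the numbers.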

Binomial #

Original Image by Tayste - Own work, Public Domain, link

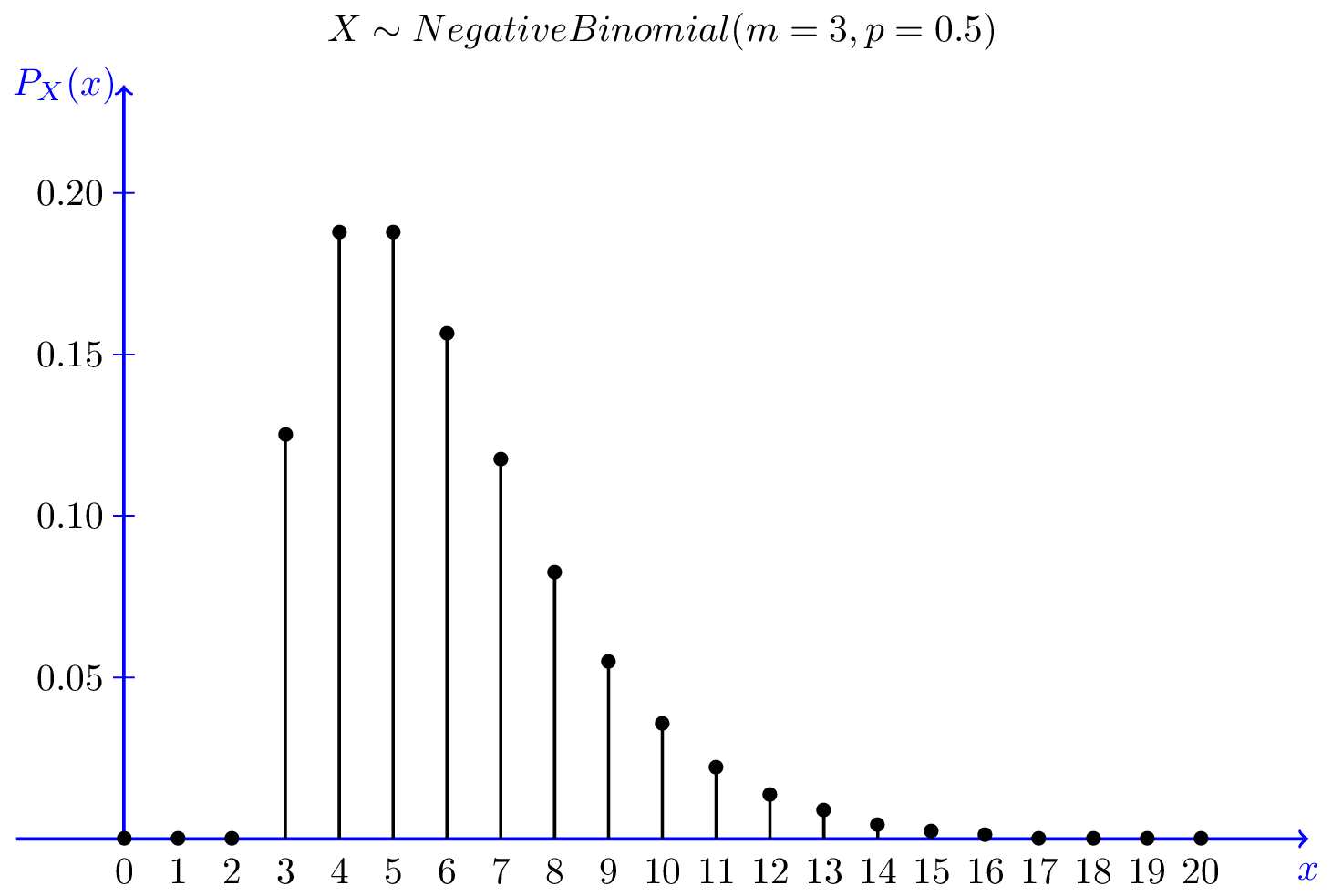

Pascal (Negative Binomial) #

From Introduction to Probability by Hossein Pishro-Nik, CC BY-NC-ND 3.0 … technically I’m abusing the license a bit, but the ‘derivative’ here is just a CSS invert, you can open the image in a new tab to see the ‘original’

Discrete Uniform #

Original Image by IkamusumeFan - Own work, CC BY-SA 3.0, link

Poisson #

Original Image by Skbkekas - Own work, CC BY 3.0, link

Cumulative Distribution Function (CDF)

functions of Random Variables

Families of continuous RVs

Conditional Probability Mass Fn & Conditional Expected Value

Gaussian Random Variables / Normal RVs (same thing)

[TODO] Gaussian Processes From Scratch (Peter Rlnts)

[TODO] https://mc-stan.org/users/documentation/

[TODO] The Fisher Information (YouTube - Mutual Information)